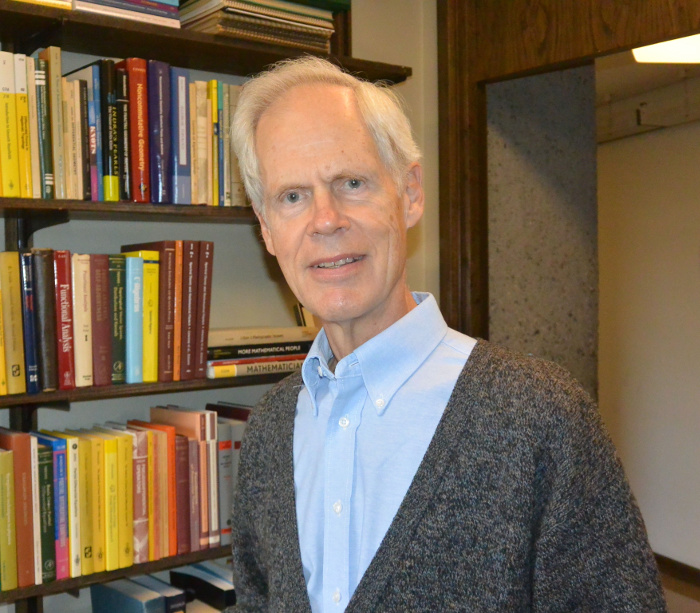

Gerald Folland is Professor Emeritus in the Department of Mathematics, University of Washington at Seattle, USA. He is a renowned analyst and is the author of many textbooks on advanced analysis. He is a regular visitor to research institutes in India; having first come to the country in 1981, he now considers India his second home. Much of this interview was initially done by Devendra Tiwari and his colleagues in December 2015 at Delhi University. The present version has been extensively revised and updated with inputs from Folland.

Growing Up

We would like to begin our conversation by talking about your initial years, and what made you choose mathematics as a career. Was mathematics the sole obvious choice? What were your interests while growing up?

GF: I was born and raised in Salt Lake City, Utah. My parents were both teachers at the University of Utah, my father in English and my mother in music. They were both born in Salt Lake City too, but they each spent several years in other parts of the country before they returned and married. Since they married late, I have no brothers or sisters, so I’ve always been good at finding my own path.

I was interested in science from my childhood, and my parents were always supportive even though they didn’t share this inclination. My first idea of what I wanted to do when I grew up was astronomy. When I was six years old, somebody gave me a little book about stars and planets meant for children. I thought that was very interesting, and I had the ambition to discover a comet.

Do you continue to enjoy astronomy as a hobby?

GF: I still think astronomy is very interesting. Actually, it is more interesting now than it was earlier, because of the Hubble telescope and all the other great advances in the observational apparatus that we can use to learn about the universe.

Did you have a telescope available during your childhood?

GF: Yes. And then a few years later I learned about chemistry and physics, but I didn’t yet think about mathematics. I mean, maths was arithmetic at that time of my life, and it wasn’t very exciting. What lit the fuse was plane geometry, which I learned as it was traditionally taught, almost straight out of Euclid’s Elements. Reasoning one’s way from point A to point B: that was great fun. From that point on I always felt that mathematics would be central in my professional life, though it wasn’t yet clear to me that I should do pure maths in an academic setting rather than research in some other environment. After my first year in graduate school I had a summer internship at Bell Laboratories, where they did research related to telecommunications. I enjoyed it, but after that I knew that I belonged in academia.

Research

Your main research interest seems to be in analysis. When did you realize that analysis, rather than geometry, was what you wanted to pursue?

GF: There was no sudden transition; it was sort of gradual. Analysis was one of the things I was very much interested in, but there was no moment when I said “Aha, I am an analyst.” In fact, my thesis problem had a geometric component. It had to do with differential forms, which are a part of differential geometry. But gradually I realized that although geometry is interesting, analysis is what I can do better.

How did you choose your thesis topic?

GF: When I was ready to start thinking about writing a thesis, my advisor, J.J. Kohn at Princeton University, suggested a problem that was sort of perfect for me. It was at the intersection of harmonic analysis, partial differential equations, and several complex variables. It was a lovely combination of things.

The idea is this: Suppose, in complex n-space, you have a real hypersurface. If you take the Cauchy–Riemann equations and throw away the normal component to the hypersurface, you get a complex of differential operators that live on the hypersurface, called \bar{\partial}_{b}. Unlike the ordinary exterior derivative, that is, the de Rham complex, this complex is not elliptic. Basically this is because you have cut down the real dimension by one, so in the hypersurface you have n-1 complex directions and one real direction left over, and that one real direction behaves differently from the others. That direction turns out to be non-elliptic, but if you make some appropriate curvature assumptions then you still get some control. And my thesis was to investigate how this works when the hypersurface is the unit sphere, because then you have the unitary group U(n) that acts on everything. My problem was to find out how things decompose under the action of the unitary group and use this to do explicit calculations. One of the things that I tried was to find at least an approximate fundamental solution for the Kohn–Laplacian of this complex. Elias Stein gave me an idea, which I tried, but it didn’t work. The error term was too big to control.

So Stein was at Princeton when you were working on your thesis?

GF: Yes, Stein was there, and he helped me when I got stuck on Fourier analysis. At that time, I didn’t know whether his idea for the fundamental solution would work or not. But I couldn’t make it work, so I came back to it a year later in 1972 and proved that the error term is definitely too big to control. At that time I was at New York University and Charles Fefferman, who was on the faculty at the University of Chicago then, came to NYU to give a talk, and I told him about this negative result that I had just proved. He said, well, maybe the unbounded picture works better. You can take the unit ball and open it up into a generalized upper half plane, and the boundary of that upper half plane is the Heisenberg group. So, I calculated the fundamental solution on the Heisenberg group and it worked just perfectly. Then Stein and I wrote a big paper on the Kohn–Laplacian, two years later.

Could you tell us what the Kohn–Laplacian operator is?

GF: The Kohn–Laplacian is a differential operator, somewhat similar to the ordinary Laplacian, that arises when you study boundary values of analytic functions of several variables; so the original motivation came from function theory.

Also, this is a nice example, or family of examples, of non-elliptic operators that still have some regularity properties that are similar to the elliptic ones, but not quite as strong. The Kohn–Laplacian has been used as a model case for studying other operators of that type.

While reading your paper,1 we were struck by the ingenuity you employed before proposing the fundamental solution of the Kohn–Laplacian.

GF: I was just very lucky. Charlie Fefferman suggested that maybe you can do an exact computation if you work on the Heisenberg group instead of the surface of the ball, so I tried and it worked. But I was lucky that I was using the right coordinate system on the Heisenberg group. The coordinates were chosen so that the commutation relation takes a particular form. If I had used slightly different coordinates, the commutation relation would look a little bit different, and then it would have been the wrong thing for finding the fundamental solution. So that was just a very nice discovery.

Later on, we asked whether we could compute an exact fundamental solution for the square of the Kohn–Laplacian, but that turns out to be a much harder problem. The answer is now known (I didn’t discover it) and it is not simple.

Is there any other operator as useful as the Laplacian? What makes it so powerful and versatile?

GF: I do not think that there is any other operator that is more important than the Laplacian, because the Laplacian is (up to scalar multiples) the unique second-order scalar differential operator that commutes with translations and rotations, so it turns up whenever you model phenomena with those symmetries. If you look at operators on vector-valued functions, there are others, such as divergence, gradient, and curl.

What was your association, both professional and personal, with Stein like?

GF: When I was writing my thesis, Kohn was the one who kept me busy with questions, and he was the one who suggested the problem that I worked on in the first place. I would make some progress, find out something, and he would say, “Now what about this other thing?” But whenever I had a question about Fourier analysis, I would ask Stein. Then a year after I got my PhD, Stein and I both were at a summer institute on harmonic analysis on homogeneous spaces. I had recently computed the fundamental solution of the Kohn–Laplacian on the Heisenberg group, and he suggested to me that we should collaborate using that as a starting point to develop a good theory for strongly pseudoconvex domains. So we wrote that ninety-page paper.

Then a few years later I was interested in the real variable theory of Hardy spaces and wanted to see how it would work on nilpotent Lie groups with dilations. I spent part of the year at the Institute for Advanced Study in Princeton and so I had the chance to talk with Stein about Hardy spaces, and that became my second collaboration with him. It was a great experience. Stein has done a lot of joint work with a lot of people and he is very good at it—very good at using people in the best possible way. We were not intimate friends but we have been good friends.

Who were your heroes during these early years of your career?

GF: Certainly Eli Stein. But at Harvard, the people I interacted with the most were Lynn Loomis, who was a fine teacher, and Raoul Bott, who was a wonderful geometer and a wonderful person.

Your first publication was on Weyl manifolds. Was it related to what you worked on for your PhD thesis?

GF: It is in differential geometry, and it was my undergraduate thesis. I wrote the first version of it at Harvard and revised it in my first year as a graduate student. At that time I was not quite sure what I wanted to work in; I was interested in both geometry and analysis.

What exactly was Weyl’s idea, and why is it called a Weyl manifold at all?

GF: Hermann Weyl had an idea for doing a unified field theory, in the sense of Einstein, to unify electromagnetism with gravity. In the case of gravity, you have a Lorentzian manifold—let us think of it as a Riemannian manifold instead since this is a little bit easier. But you don’t really have a Riemannian metric because there is no canonical unit of length. You can use metres, you can use miles, it doesn’t matter. So instead of a Riemannian manifold, you have what is called a conformal manifold, where at each point you have a metric determined up to a positive scalar multiple. That gives you a so-called line bundle—that is, at each point, you have a line of metrics.

Now, Weyl said, maybe the unit of length at one point doesn’t determine the unit of length at other points completely, and if you take a yardstick at one point and you move it to a different point, the unit of length you get at the new point depends on the path you took. He developed a structure where, to compute the length at the new point, you integrate a certain 1-form over the path and exponentiate, and that 1-form should be the electromagnetic potential. In other words, you put a connection on this line bundle, which gives you a way to parallel-transport lengths, and that connection could be the electromagnetic potential. It has the right kind of gauge invariance; the electromagnetic potential is only determined up to an exact differential, and that’s fine because if you integrate an exact differential around a closed curve you get zero.

It was a very nice idea, but it didn’t work, because there is absolutely no experimental evidence that if you take your yardstick and move it around a closed path, it can come back with a different length. And then, a few years later, Weyl found that his idea could be made to work by thinking about phases instead of lengths. Changes in the length gauge are multiplicative, so they are given by the exponential of the integral of a 1-form. If you look at the exponential of i times the integral of the 1-form instead, you are changing a phase factor instead of a length. And then it connects with the quantum mechanical wave function, and you get the gauge invariance that is appropriate to electromagnetism in quantum mechanics.
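
In symbols, the two versions of Weyl's idea can be sketched as follows. The notation is ours and only illustrative: \gamma is a path from a point p to a point q, A is the 1-form playing the role of the electromagnetic potential, \ell is a length, and \psi is a wave function. Signs and physical constants are suppressed.

```latex
% Length gauge (Weyl's original proposal): transporting a length along \gamma
\[
\ell(q) = \ell(p)\,\exp\!\Big(\int_{\gamma} A\Big)
\]

% Gauge freedom: A and A + df give the same factor around any closed curve, because
\[
\oint_{\gamma} \mathrm{d}f = 0
\]

% Phase gauge (the later version): a phase factor replaces the length factor
\[
\psi(q) = \psi(p)\,\exp\!\Big(i\int_{\gamma} A\Big)
\]
```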

The Relation between Mathematics and Physics

When you were a student, was the general theory of relativity a part of the undergraduate curriculum?

GF: No, it was certainly not a part of the undergraduate curriculum, except in a very limited sense, though the special theory of relativity was part of the standard curriculum. But I tried to read about general relativity as an undergraduate by myself.

One of the things that still amazes me about progress in science is the time-delayed confirmation of general relativity. When Einstein developed the general theory of relativity, the experimental evidence was very meagre. There was an anomaly in the orbit of Mercury and there was the fact that the sun bent light rays, which you could see during an eclipse. That was all. Now, of course, the Hubble telescope shows pictures of galaxies that get multiply refracted around other galaxies, and GPS devices require the general theory of relativity to work properly because time runs a bit faster up where the satellites are than here on the Earth’s surface. That kind of delayed confirmation of the theory is very interesting to see.

Are there such applications of special relativity too?

GF: Well, special relativity is part of the basic fabric of particle physics. Down at the level of elementary particles, time dilation and space contraction and E=mc^{2} are not something magical but are everyday facts of life. For example, time dilation (that is, the fact that if something is going faster, then its clock runs more slowly as observed by us) is why muons created by cosmic rays in the upper atmosphere can reach the surface of the Earth. The half-life of a muon is something like a millionth of a second and the distance they come down from is about one ten-thousandth of a light-second. So it would seem that none of them would be able to reach the Earth’s surface. But those that are going really fast run on a slower clock and survive to reach the surface. So, in this way, relativity has just become part of working physics.
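
The arithmetic behind the muon example can be checked in a few lines. The sketch below takes the distance from the answer (one ten-thousandth of a light-second) and adds two assumptions that are not in the interview: a mean muon lifetime of about 2.2 microseconds and an illustrative speed of 0.999c.

```python
import math

MEAN_LIFETIME_S = 2.2e-6    # assumed muon mean lifetime at rest, in seconds
DISTANCE_LIGHT_S = 1.0e-4   # distance to travel, in light-seconds (figure from the text)


def lorentz_factor(beta):
    """Time-dilation factor gamma for a speed beta = v/c."""
    return 1.0 / math.sqrt(1.0 - beta * beta)


def survival_fraction(beta, dilated=True):
    """Fraction of muons that survive the trip at speed beta (in units of c)."""
    travel_time = DISTANCE_LIGHT_S / beta   # duration measured on the ground
    if dilated:
        # The muon's own clock runs slow by a factor gamma.
        travel_time /= lorentz_factor(beta)
    return math.exp(-travel_time / MEAN_LIFETIME_S)


if __name__ == "__main__":
    beta = 0.999  # assumed speed, chosen only for illustration
    print("gamma:", lorentz_factor(beta))
    print("surviving fraction without time dilation:",
          survival_fraction(beta, dilated=False))
    print("surviving fraction with time dilation:",
          survival_fraction(beta, dilated=True))
```

With these assumed numbers the trip lasts roughly 45 lifetimes on a ground-based clock, so essentially nothing would survive without time dilation, whereas a fraction on the order of a tenth survives once the slowed clock is taken into account.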

Does your work on partial differential equations have applications anywhere in the natural sciences?

GF: Not directly. There are a lot of partial differential equations that are interesting on their own. Regarding my work, it depends on what you want to call an application. After my first year in Princeton, I spent the summer working at Bell Labs, and they gave me a problem to work out about an ordinary differential operator coming from the theory of dielectric waveguides. Usually, you make the approximation that there are no heat losses in the waveguide. Now if you assume that there is some heat loss and build a mathematical model for that, you get a non-self-adjoint differential operator for which you want to do spectral theory. And most of the time it works nicely, with a little modification, and you get what you expect.

But there is a strange phenomenon that can happen in principle. Typically, you have finitely many negative eigenvalues and continuous spectrum on the positive real axis. But if it is not self-adjoint, the eigenvalues are not real anymore. The continuous spectrum still turns out to consist of [0, \infty), but eigenvalues can wander around in the complex plane and fall into the continuous spectrum. If they do this, they are not eigenvalues any more, but they produce some bad behaviour in the spectral resolution of the continuous spectrum. So you do not have standard spectral theory: if you try to write out something like the Fourier inversion formula for this situation, there is a singularity that you have to deal with. I wrote a couple of papers on that where I found a nice way to deal with the singularities. So that is something definitely directly motivated by a physical problem.

Now, if you ask, are my theorems really interesting to the physicists? No, I don’t think so. They provide the mathematically rigorous way of dealing with slightly weird situations, but it is not something the physicists would worry about.

Do you agree with the general criticism that mathematicians have of physicists’ way of applying mathematical tools in their own work?

GF: The most serious criticisms come when the physicists do some things that at present are not mathematically respectable. But somehow it works, which means that there is probably a valid mathematical theory in there that is waiting to be invented. You have to realize that mathematical analysis was done in the past in a way that doesn’t meet the standards of present-day mathematics. For example, Euler did some things that were just as un-rigorous as some of the things physicists do. Since he was smart, he got it to work.

What is your opinion, in general, on the acceptance of new ideas and concepts that come from physical theories—such as the Dirac delta function?

GF: Dirac was not the first person to use these things. Some of these ideas go back to Heaviside in the 1880s in his operational calculus. On the other hand, each era has its own idea of what is acceptable and what is not. It took people a long, long time to accept the complex number system, but now for us it’s absolutely routine. The first place I know where complex numbers appeared in the literature is back in the sixteenth century when they discovered the solution for a general cubic equation, which has the peculiar property that if all three roots are real and distinct, the formula involves imaginary numbers. And that really bothered people involved in the discovery of the formula. Then, later on, Euler used imaginary numbers, but he also used the word “imaginary” and meant it to be taken literally—that is, unreal. It was not until around 1800 or later that people realized that you could represent complex numbers as points in a plane. Without that conceptual help, there was no hope of general acceptance. From the present point of view, once you say that complex numbers are ordered pairs of real numbers, then everything is fine. But that was not so clear for a while, and it took another fifty years for complex numbers to be generally accepted.
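
The sixteenth-century puzzle is easy to reproduce. The sketch below applies Cardano's formula to the depressed cubic t^3 + pt + q = 0 for the classic example x^3 = 15x + 4. The choice of example and the function name `cardano_roots` are ours, not something from the interview. All three roots are real, yet the intermediate quantities are complex.

```python
import cmath


def cardano_roots(p, q):
    """Roots of t**3 + p*t + q = 0 via Cardano's formula (assumes p != 0)."""
    # Negative when there are three distinct real roots.
    disc = (q / 2) ** 2 + (p / 3) ** 3
    # One complex cube root; Python returns the principal value.
    u = (-q / 2 + cmath.sqrt(disc)) ** (1 / 3)
    omega = cmath.exp(2j * cmath.pi / 3)  # primitive cube root of unity
    roots = []
    for k in range(3):
        uk = u * omega ** k
        vk = -p / (3 * uk)  # partner cube root, fixed by uk * vk = -p / 3
        roots.append(uk + vk)
    return disc, u, roots


if __name__ == "__main__":
    disc, u, roots = cardano_roots(-15, -4)
    print("quantity under the square root:", disc)
    print("complex cube root used:", u)
    for r in roots:
        print("root:", r)
```

The imaginary parts of the three results vanish up to rounding error, even though the square root inside the formula is taken of a negative number.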

Quantum Mechanics

You have studied the Heisenberg group too. Was it the reason for your interest in quantum mechanics?

GF: I got interested in quantum mechanics early, in my undergraduate days. I never took any course in quantum mechanics, though; I just read about the subject on my own.

Is it true that at the time quantum mechanics was invented, the relevant mathematics was available but physicists were not acquainted with the subject very well and were therefore very puzzled for some time?

GF: Well, some of the mathematics was available—the theory of L^2 spaces and the methods for solving a lot of the relevant differential equations—but abstract Hilbert spaces and unbounded operators on them hadn’t been studied yet. The physicists weren’t quite ready to worry about such things; they had their hands full figuring out the physics without putting a lot of thought into the mathematical technicalities. The ideas in some of the early papers were not yet clarified; for example, Heisenberg’s first paper is very hard to read. Heisenberg was not a mathematician, and it was really Max Born and Pascual Jordan who looked at his papers and saw what he was doing mathematically. I think they were the ones who pointed out that his matrices satisfy the canonical commutation relation, which they recognized because it has a classical-mechanical analogue—the Poisson bracket relation for position and momentum in Hamiltonian mechanics. I think that must have been the “aha” moment for them when they saw it.

And then things happened remarkably fast. Heisenberg and Schrödinger wrote their original papers in 1925–26, and by 1930 Dirac had already written the first edition of his quantum mechanics book and put it into a systematic form. It is a great book. Of course, he used a mathematical formalism that did not quite make sense according to the mathematics that was known at that time, including his delta function. The equation \int_{-\infty}^{\infty} \mathrm{e}^{2\pi ixy}\,\mathrm{d}y = \delta(x) is a perfectly good way of writing the Fourier inversion formula if you interpret it the right way, but things like that drove the mathematicians crazy for a while. And so von Neumann re-did the foundations of quantum mechanics from his own perspective using unbounded operators on Hilbert spaces, but this didn’t have much influence on physicists. Mathematicians are always perplexed when physicists don’t appreciate their work enough, but the physicists didn’t need von Neumann’s theory; they already had Dirac. Of course, a few years later, distributions were invented and then you could turn what Dirac had said into honest mathematics, without too much difficulty.
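
One way to read Dirac's formula as honest mathematics is to pair both sides with a well-behaved function f. The sketch below assumes the normalization \hat{f}(y) = \int f(t) \mathrm{e}^{-2\pi i t y}\,\mathrm{d}t, chosen by us to match the 2\pi in the exponent. The middle step is the purely formal one; the equality of the two ends is the Fourier inversion theorem.

```latex
\[
f(x) = \int_{-\infty}^{\infty} \delta(x - t)\, f(t)\, \mathrm{d}t
     = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty}
       \mathrm{e}^{2\pi i (x - t) y}\, f(t)\, \mathrm{d}t\, \mathrm{d}y
     = \int_{-\infty}^{\infty} \mathrm{e}^{2\pi i x y}\, \hat{f}(y)\, \mathrm{d}y .
\]
```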

Could you shed some light on the solutions of the Dirac equation and the role of spinors in clarifying the picture of the wavefunction of the relativistic electron?

GF: The electron has an intrinsic angular momentum of magnitude \hbar/2, and we say it has spin 1/2. To describe this mathematically, you need a wave function that takes values in a space of two-dimensional vectors called Pauli spinors. The orientation of the vectors tells you whether the electron has spin up or down in a given direction. Dirac constructed a different model using four-dimensional spinors (“Dirac spinors”)—that is, he described the electron using a four-component wave function. The point of this was that it enabled him to find a first-order differential operator on these functions whose square is the Laplacian and thus construct his first-order wave equation in which space and time occur on an equal footing. You need four-dimensional spinors to get such an operator, but it doesn’t mean that you are suddenly making the electron twice as complicated. The Dirac equation tells you that two of the four components are determined by the other two, so the spin degree of freedom remains the same.
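
The algebra that makes such a first-order operator possible can be checked by machine. The sketch below builds the four gamma matrices in one standard choice, the Dirac representation, and confirms that they anticommute to the Minkowski metric. It follows that the symbol of the first-order operator squares to E^2 - |p|^2 times the identity. The helper names are ours. In this relativistic setting the operator obtained by squaring is the wave operator, in which time and space enter with opposite signs.

```python
def matmul(a, b):
    """Product of two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def lincomb(coeffs, mats):
    """Linear combination sum_i coeffs[i] * mats[i]."""
    n = len(mats[0])
    return [[sum(c * m[i][j] for c, m in zip(coeffs, mats)) for j in range(n)]
            for i in range(n)]


def kron(a, b):
    """Kronecker product of two square matrices."""
    m = len(b)
    size = len(a) * m
    return [[a[i // m][j // m] * b[i % m][j % m] for j in range(size)]
            for i in range(size)]


def close(a, b, tol=1e-12):
    """Entrywise comparison of two matrices."""
    return all(abs(x - y) < tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))


I2 = [[1, 0], [0, 1]]
PAULI = [
    [[0, 1], [1, 0]],
    [[0, -1j], [1j, 0]],
    [[1, 0], [0, -1]],
]
I4 = kron(I2, I2)

# Dirac representation: gamma^0 = diag(I, -I), gamma^k = [[0, s_k], [-s_k, 0]].
GAMMA = [kron(PAULI[2], I2)] + [kron([[0, 1], [-1, 0]], s) for s in PAULI]
ETA = [1, -1, -1, -1]  # Minkowski metric, signature (+, -, -, -)


def anticommutator(a, b):
    """Return ab + ba."""
    return lincomb([1, 1], [matmul(a, b), matmul(b, a)])


def clifford_relations_hold():
    """Check gamma^mu gamma^nu + gamma^nu gamma^mu = 2 eta^{mu nu} I."""
    for mu in range(4):
        for nu in range(4):
            expected = lincomb([2 * ETA[mu] if mu == nu else 0], [I4])
            if not close(anticommutator(GAMMA[mu], GAMMA[nu]), expected):
                return False
    return True


def slash(energy, px, py, pz):
    """Symbol of the Dirac operator: E gamma^0 - p . gamma."""
    return lincomb([energy, -px, -py, -pz], GAMMA)


if __name__ == "__main__":
    print("Clifford relations hold:", clifford_relations_hold())
    e, px, py, pz = 2.0, 0.3, -0.5, 1.1
    d = slash(e, px, py, pz)
    target = lincomb([e * e - px * px - py * py - pz * pz], [I4])
    print("slash squared equals (E^2 - |p|^2) I:", close(matmul(d, d), target))
```

No 2-by-2 matrices can satisfy all four anticommutation relations at once, which is one way to see why the four-component wave function is needed.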

Dirac realized that there is a problem with his equation because the spectrum of the Dirac operator is not positive. It has a negative spectrum as well as a positive spectrum, and a gap in between. You can’t just throw away the negative part of the spectrum, and therefore you have to account for why you can’t have particles falling down into negative energy states, losing more and more energy until there’s some sort of blow up. So he came up with the idea that the world is filled up with a sea of electrons that can’t be observed but have occupied all of the negative energy states. It was a kind of a crazy idea, but it had enough un-craziness to get over the difficulty for a long enough time to make some progress. Then later you could look at it from a different perspective. But that really required a strong shift in perspective: instead of thinking of the Dirac equation as a quantum equation for one particle, you should think of it as a classical equation for a field. Then you quantize it and get quantum fields that allow for both electrons and positrons. What looks like negative energy states for electrons turns out to be positive energy states for positrons.

But now you have entered into quantum field theory, and when you try to add interactions, the calculations lead to divergent integrals, renormalization problems, and so forth. There weren’t any good solutions to these problems until after World War II, when Feynman and others turned their energies back to doing pure science.

Then there was a big push to develop a mathematically consistent and rigorous quantum field theory. People worked hard for that and proved a lot of theorems and developed axiomatic approaches to quantum field theory. But the real trouble is to come up with non-trivial examples of these axiomatic theories. The theory of free fields, fields that don’t interact with anything, is already non-trivial though not so difficult, but working with interacting fields in this picture turns out to be very hard. And that is still an open problem in four space-time dimensions. There are a few examples in two and three dimensions, but the goal of getting a complete four-dimensional theory is still not achieved. This is basically one of the Millennium Prize Problems.

What is your view on the role of time in different theories of physics?

GF: Even in quantum mechanics, it’s not quite clear; this is a sticky point. You have the uncertainty relation between position and momentum, and that is fine. There is also an uncertainty relationship between time and energy, but that’s not the same thing because time is not an observable in the same way as these other things are. And there is no operator that can satisfy the canonical commutation relation with energy.

But then how does the concept of time work in quantum field theory, where quantum mechanics and special relativity come together?

GF: What it usually means is that sitting there, either in plain sight or under cover, is a representation of the Lorentz group. The state space for a particle should carry a representation of the Lorentz group, or more generally the Poincaré group (where you throw in translations also). You should be able to observe the particle from any inertial frame of reference. And in fact, that was one of Wigner’s nice accomplishments, that he classified enough of the representations of the Poincaré group to get all the physically interesting stuff. He found that they were basically parametrized by mass and spin, so each one of these irreducible representations corresponds to a particle of some mass that’s a nonnegative number, and spin that’s a nonnegative integer or half-integer. That works out very nicely.

You were mentioning the role that Pascual Jordan played in the early work on the mathematical foundations of quantum mechanics. But why didn’t Jordan algebras work out as an effective idea in quantum mechanics?

GF: The Jordan product was suggested as a way of quantizing classical observables that are the product of two other observables, the trouble being that the commutative product in classical mechanics becomes non-commutative in quantum mechanics—but it turned out not to be very useful.

What one has to understand here is the following. You try to make a correspondence between quantum mechanics and classical mechanics, but there is just no magic way to go from classical mechanics to quantum mechanics. It is never going to be perfect, because classical mechanics is a limit of quantum mechanics and not the other way around. You should be able to derive classical mechanics from quantum mechanics if you try hard enough, but going the other way is impossible. There are some points where you just have to get out and do some experiments. You find that this is how the world works and you have to account for it somehow.

Has it ever been the case that an insight from the present day has resulted in people not just revisiting an otherwise well-entrenched discipline, but actually revising our very understanding of a subject?

GF: Sometimes you find unexpected connections. For example, the canonical commutation relations lead you to a Lie group, the Heisenberg group, that is interesting for a lot of other reasons. Really, the first occurrence of the commutation relations was in Jacobi’s lectures on classical mechanics where he introduced the Poisson bracket relations.

The Heisenberg group was there implicitly in the commutation relations, but people didn’t explicitly think about it as a group. Even Marshall Stone and John von Neumann thought of it as two representations of the real line U(t) and V(t) that almost commute, and they did not recognize the implicit group structure. I guess if you had asked them if those things generate a group, they would have said, “Oh yeah, they do,” but they didn’t think of it in that way. It was not until the 1970s, when the term “Heisenberg group” became part of the common vocabulary, that it became clear that the Heisenberg group comes not only from the quantum mechanical situation but also from several complex variables and in several other contexts.

One of the most interesting examples I know of influence going backward is what happened with gauge field theories in the 1970s and ’80s. Physicists were interested in Yang–Mills type theories of quantum fields with some gauge invariance. The way it works on the formal level is that you write down non-Abelian field equations (classical equations that produce classical fields) and then you proceed to quantize those fields. From the physicists’ point of view, all they are interested in is the quantized version (that is, a theory that could describe strong interactions, weak interactions, and so forth), because the unquantized version that describes classical fields doesn’t have any meaning in classical physics. Nonetheless, those classical field equations turned out to have all sorts of interesting applications in differential geometry, in the study of 4-manifolds and related things. I think that is a very interesting interaction between mathematics and physics. It’s a mathematical idea that was first proposed in the context of physics, and physicists took it in one direction and mathematicians took it in another direction.

How would you evaluate the success of perturbation theory, and to what extent is it mathematically rigorous?

GF: A lot of what you can do in quantum field theories to get actual physical results is perturbation theory. You start off with free fields, then you turn on the interaction and you pretend that it’s small, which it certainly is not. Then you do the perturbation theory and see what you get and hope that it agrees with experiments. If you take the first few terms and forget about the rest of them, and you get a good answer, then you are happy.

But there are certain places in the arguments where this is not enough. There are certain phenomena that you can’t see if you just look at the first few terms of the perturbation series. This happens with mass renormalization, for example. Suppose you have a particle, moving from some point A to another point B, and it is not really doing anything but just going between them. According to quantum field theory, there is actually a lot of stuff going on in the meantime, virtual particles being emitted and re-absorbed, and this results in mass renormalization. But if you only look at finitely many terms of the perturbation series, you don’t see the mass renormalization.

One thing mathematicians seem to have trouble understanding is this: when you do perturbation theory for quantum fields, what you get is a formal infinite series. All the terms of this series are difficult to evaluate, but you can do it after a lot of work. Mathematicians worry about whether the series converges or not, but from the physicists’ point of view, that’s not the issue. The issue is, do the first few terms give you a good result? That is a completely separate question, right? If a series converges but very slowly then the first few terms may be totally useless. On the other hand, if the series diverges but behaves like an asymptotic expansion, then things go along very well as long as you do not go beyond a certain point. If you look at the perturbation theory of quantum electrodynamics, nobody believes that it actually converges. It’s not proved, but there is strong heuristic evidence that it does not. And this doesn’t bother physicists, because the only time these higher order terms come into play is when you’re looking at very high energy phenomena. And then you can’t do quantum electrodynamics all by itself because there are other interactions that come into the picture.

In perturbation theory of quantum fields, the first problem is that the terms are sums of certain definite integrals, and these integrals tend to diverge mostly because things do not decay fast enough at infinity. You have to subtract off infinities in some systematic way (renormalise) to make sense out of it. You can do that. It is hard work, but it was proved quite a long time ago that it is possible to do it up to an arbitrarily high order. More recently, Alain Connes and his students looked at its underlying mathematical structure, interpreting the results in terms of Hopf algebras. It is very interesting stuff that I don’t understand very well. Anyway, now you have this perturbation series and you can make sense out of all the terms; and for getting physically interesting results, that is usually plenty. In order to get agreement with experiments that measure things to extremely high precision—for example, the anomalous magnetic moment of the electron, the hyperfine splitting or Lamb shift, and things like that—you have to add up quite a few of these terms, and it is computationally very difficult, but it works. Now, the real mathematical problem, I think, is that you would accept this infinite series at least to be an asymptotic series—but we have no idea of how to construct the thing it should be asymptotic to.
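Editorial illustration (not an example given in the interview): the difference between a series that converges and a series whose first few terms are useful can be seen in a standard textbook case. The function F and the variable x below are introduced here purely for illustration and have nothing to do with quantum electrodynamics itself.

```latex
% Assumed illustrative example, not taken from the interview.
% Expanding 1/(1+xt) in powers of xt and integrating term by term gives
F(x) \;=\; \int_0^\infty \frac{e^{-t}}{1+xt}\,dt
\;\sim\; \sum_{n=0}^{\infty} (-1)^n\, n!\, x^n
\qquad (x \to 0^+).

% The series diverges for every x \neq 0, since n!\,x^n \to \infty.
% Nevertheless, for x > 0 the error after N terms is controlled by
% the first omitted term:
\left|\, F(x) - \sum_{n=0}^{N-1} (-1)^n\, n!\, x^n \,\right|
\;\le\; N!\, x^N .
```

For x = 0.1 the bound N! x^N decreases until N is about 10, where it is roughly 3.6 × 10^{-4}, and grows without limit after that. The first few terms therefore give a good answer even though the full series has no sum, which is the situation described above.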

Feynman diagrams have been very useful for a physicist in calculations. Do we have a rigorous mathematical theory for that?

GF: Feynman diagrams are just a nice way of cataloging and describing the integrals that arise from perturbation theory, and that was one of Feynman’s real contributions. Perturbation theory involves taking the products of field operators expressed in terms of products of creation and annihilation operators, and forming complicated-looking integrals. Feynman drew pictures that indicate intuitively what those integrals represent. What they show is particles coming in and interacting and going out again, but in the middle some of the lines in the diagram represent virtual particles, particles that don’t obey the mass-shell condition and can’t be observed directly. They are really kind of fictional objects, in the sense that it is only the integral that has a clear meaning: it gives you a number that you can check against experiments. But this way of thinking about it makes things conceptually a lot clearer.

The simplest kind of Feynman diagram is a tree without any loops, and those represent real particles doing real things. So, for example, you can write out calculations for what happens when an electron and a positron come together and turn into two photons. You draw the diagram, and it has electron and positron lines coming in here and two photon lines going out there. Those are all real particles, but there’s a little line segment in the middle that is virtual. It is only when you get diagrams involving closed paths, involving loops, that you get divergences.

You have given talks on the history of harmonic analysis. Could you tell us what you have been able to find in the process?

GF: I started roughly from about 1900, or more precisely, 1902, as that was the year of the invention of Lebesgue integration. Before that, there was some good stuff, but it is a whole new story when you have the Lebesgue integral to work with. The first Fourier analysis theorem to come after that, though, did not have to do with Lebesgue integrals. It was Leopold Fejér’s discovery that the Fourier series of a continuous function is Cesàro summable to the function. The first real application of Lebesgue theory is the fundamental result that is now known as the Riesz–Fischer theorem. I went to look up their papers from 1907. (They were independent; Riesz was a little bit earlier, and Fischer acknowledged Riesz in his paper.)

The theorem that Riesz states and proves is: Suppose you have an orthogonal set of functions in L^2 on an interval, call them p_n. (He does not say “L^2” or “orthogonal set of functions”, but that is the modern terminology.) Then, if you are given a square-summable sequence of complex numbers c_n, there is a function f so that the inner product of f with p_n is c_n for all n. If you look at that theorem now, you will say, what was the difficulty, what was the point? Fischer made it explicit that the point is that L^2 is complete. Again, Fischer doesn’t use the word “complete”; what he states is that given a Cauchy sequence in L^2, there is something to which it converges.
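Editorial illustration: in modern notation, and assuming the p_n are normalised so that each has norm 1 (an orthonormal system, which is an assumption added here), the statement and the place where completeness enters can be sketched as follows. The interval [a, b] and the partial sums s_N are names introduced for this sketch.

```latex
% Sketch in modern notation; [a,b] and s_N are illustrative names.
% Hypotheses: (p_n) orthonormal in L^2([a,b]), and \sum_n |c_n|^2 < \infty.
s_N \;=\; \sum_{n=1}^{N} c_n\, p_n ,
\qquad
\| s_N - s_M \|_{L^2}^2 \;=\; \sum_{n=M+1}^{N} |c_n|^2
\;\longrightarrow\; 0
\quad (N > M \to \infty).

% So (s_N) is a Cauchy sequence in L^2. Completeness of L^2 supplies
% a limit f \in L^2([a,b]), and continuity of the inner product gives
\langle f, p_n \rangle \;=\; \lim_{N\to\infty} \langle s_N, p_n \rangle \;=\; c_n
\qquad \text{for all } n .
```

Everything except the existence of the limit f is elementary algebra, which is why the content of the theorem is the completeness of L^2.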

Books and Teaching

You have written many textbooks on advanced mathematics. Tell us how you planned these.

GF: My first book was with Kohn on The Neumann Problem for the Cauchy-Riemann Complex. In my last year as a graduate student, Kohn taught a course and I took notes, which we then worked up into this book. The next book was Introduction to Partial Differential Equations, which was originally in the Princeton Mathematical Notes series. Twenty years later, I made it into a real book. When I taught the course, it was a lot of work because I didn’t have any good textbook. There weren’t any books that did things the way I wanted to do them. So I sort of made it up as I went along, and then I said, well, I might as well get something out of it.

Similarly, when I taught the graduate real variable course at Washington the first time, I used Royden, with Rudin as a supplementary reference. They are both good books in their own ways, but I thought someday I would come back and write a book myself. So a few years later when I taught it again, I wrote the first draft of the book as I went along. That is my Real Analysis. Harmonic Analysis in Phase Space is a book I wrote when I was on sabbatical one year. I had been thinking about the ideas for a while and then I finally decided what I wanted to do with them. Part of that work is based on lectures I gave at ISI Bangalore in 1987.

Which one of your books is your favorite?

GF: The one I had the most fun with is A Course in Abstract Harmonic Analysis. The subject matter is neat stuff. For this one, I knew that I wanted to write a book, so I arranged to teach a year-long course in functional analysis. In the first two quarters I did the standard stuff that everybody ought to know, then did the abstract harmonic analysis in the third quarter.

The book Harmonic Analysis in Phase Space has many things related to mathematical physics. Have you ever taught any course on quantum mechanics or quantum field theory?

GF: I taught a course on quantum field theory once, in which the audience was mostly mathematics students except for one physics graduate student and one or two mathematics faculty. When I taught functional analysis, I always incorporated some topics from physics. The ideas in Harmonic Analysis in Phase Space came from mathematical physics, but it is more oriented towards pure Fourier analysis and partial differential equations, particularly pseudodifferential operators.

In India, finishing the syllabus for a course is often regarded as the prime objective, even among teachers at the higher levels. What is your experience with the education system in the US?

GF: It depends on the course. In the lower level courses, you often have to follow a fixed syllabus, especially because these courses are the prerequisites for other things. You want people to know what they are supposed to know. In more advanced courses, usually there is a little more freedom.

The other practical issue is that when there are multiple sections with different instructors, you need to have some sort of coordination between them. When I first came to the University of Washington, the calculus course involved multiple sections and multiple instructors. And there was a syllabus, a piece of paper with a list of things you were supposed to do. But it was not enforced very strictly and instructors were perfectly free to emphasise and de-emphasise various things. The discrepancy between what Prof. A wanted the students to know and what Prof. B wanted them to know was a problem. Now it is much more coordinated. So if you’re willing to go along with the program that is set out, it’s easy because you don’t have to think about it. But if you don’t like it then there is a problem.

Do you think teaching methodologies at the higher level can help mathematicians and physicists communicate better?

GF: I think a really good teacher can do a lot in that way. But the trouble comes from both sides. If you want to come from the mathematics side there is this whole idea of mathematical structure. We are all educated in it and it becomes part of our DNA, and then we apply it to physics. But physicists refer to different conceptual structures that they work with. So that is the real communication problem. I think it is possible to get over the linguistic difficulties, but not the whole point of view of what you bring to the problem and the kind of knowledge and tools that you have. When I try to read the physics literature on quantum field theory, there are things that physicists do that just drive me up the wall, especially when they are willing to perform formal calculations on things that are not really well defined. I am willing to accept formal calculations that are not fully justified, but at least I want to know that the objects they are calculating with are something that really exists.

When it comes to teaching, do you teach courses on analysis mostly?

GF: Pretty much. Yes, I mostly taught analysis, but analysis means everything from first-year calculus and up. Once in a while a little bit of linear algebra.

Is your teaching style inspired by anyone?

GF: I never deliberately copied the methods of anybody but I was probably influenced by Loomis. The reason I say this is that when I came to India in 2008, I spent a couple of weeks at CMI [Chennai Mathematical Institute], and David Mumford was visiting there at the same time. He came to one of my lectures and said that my lecturing style reminded him of Loomis. I took that as a compliment.

Do you have any advice to graduate students and beginning researchers, on choosing their area of research or problems that they could work on?

GF: I am not a good person to answer this question, as I have had only one PhD student. Coming up with a good problem is hard. Some people are very good at it. For example, Stein is good at it and he has had many students. I sort of followed my own path in doing mathematics, and it is not a path that is easy to bring other people along.

Is there any discipline in mathematics that doesn’t strike you as interesting? If so, why?

GF: I can’t say there’s anything I don’t like, but there are some parts of mathematics that I don’t get. There is a large part of algebraic geometry, especially over a field of characteristic p, where I don’t have the intuition to think of it in a geometric way. Large parts of number theory too. For example, the solution of Fermat’s last theorem involves some stuff that I have trouble understanding.

Do you discern differences between your working style and that of other mathematicians?

GF: It’s a question of one’s own personality, one’s own mathematical personality. Mark Kac once made a remark that there are two kinds of genius—there are ordinary geniuses and there are magicians. Ordinary geniuses are people like you and me, except that they are a lot smarter, so they can accomplish more. But when you look at what they did, you say, okay, on a good day I might have been able to do that too. Magicians are the ones that pull great new ideas out of thin air. Stein is an ordinary genius par excellence, and ordinary geniuses make much better thesis advisers than magicians. Of course, I’m not a magician of any kind.

In a research article one often cites many references. Is it always the case that the authors have gone through all those references thoroughly?

GF: Probably not. You cite references for several different reasons. One is that somebody has a specific result that you want to quote. In that case, you should go back and read that part and make sure you believe the statement and proof, so you are not basing your own work on something false. In other cases, you just want to acknowledge that X has done some related work on these problems. In that case, you do not have to spend that much time reading the work.

One can’t read everything—it takes forever—but I do find it interesting to look at old papers. Of course, you may find that old papers are hard to read because they are looking at things in a different way, or their notations are clumsy, but you don’t want to ignore them. I remember that I resisted learning the original proof of the Stone–von Neumann theorem on the uniqueness of the canonical commutation relations, as now you can get it as a corollary of the Mackey imprimitivity theorem. I understood it that way, and for a long time I resisted going back and reading von Neumann’s original paper, mainly because it was in German. I can read German, but it takes some work. But finally I sat down and read it and I was happy that I did, because it was a very nice paper.

You visit India, particularly Bangalore, quite regularly. In fact, there is a TIFR Lecture Notes on Partial Differential Equations arising out of your first visit to the Bangalore Centre of TIFR in the early 1980s. There is also your famous textbook with the same title. Did the TIFR Lecture Notes inspire you to write your textbook?

GF: No. My textbook was based on a course I taught in Seattle in 1975. In its original form it appeared in Princeton’s series of lecture notes in mathematics. The TIFR Lecture Notes have some overlap with it. Then in 1994 I combined the Seattle lecture notes with some parts of the TIFR notes, expanded them, and made them into a real textbook.

What makes it special for you to visit India from time to time?

GF: I think I have on average visited India once in every four years. I always come for some academic purpose, but I also do things as a tourist. And sometimes I visit some NGOs supported by a charitable organization that I belong to in Seattle called “People for Progress in India”. This is an all-volunteer group of people, mostly Indians living around Seattle, who are involved in supporting small-scale development projects to benefit disadvantaged people in India, usually in rural areas. When I visit one of their projects, I get to see village life in a way that tourists almost never do. I enjoy that, as I am interested in all aspects of Indian society and culture.

Thank you, Prof. Folland, for this conversation.

Acknowledgement

Devendra Tiwari would like to acknowledge Nithyanand Rao for editing this interview.

Footnotes

- G.B. Folland. A Fundamental Solution for a Subelliptic Operator. Bull. Amer. Math. Soc. 79 (1973): 373–376.