What is common between 2, \ 3, \ 7, \ 11 and 13?” This question was posed by Amitabh Bachchan to a contestant during a Children’s Day special episode of the famous “prime”-time show Kaun Banega Crorepati (the Indian version of Who Wants to be a Millionaire). The contestant, Tushit Nikose, a 11-year-old science-lover, beamed in joy even before the options were laid out. “C: All are prime numbers,” he replied promptly and won 3000 points. On further questioning, he defined a prime number as a number which has only two factors, 1 and itself. He also explained what a “factor” means. Bewildered, Mr. Bachchan confessed that he knew nothing about these numbers, eliciting laughter from the children in the audience. A few questions later, when Tushit decided to quit the game after winning Rs. 6,40,000, Mr. Bachchan brought out a smartphone to transfer the amount digitally to Tushit’s account, declaring it “a quick, safe and hassle-free way to transfer money,” perhaps unaware that prime numbers are the fundamental enablers of such safe and quick digital transactions.

A prime number, as Tushit explained, is a number which has only two factors: 1 and itself. Primes serve as building blocks of all the numbers: any natural number greater than 1 is either a prime or can be written uniquely as a product of powers of prime numbers. In the latter case, the number is said to be composite. For example, the composite number 69 can be broken down as 3 \times 23. No other primes can multiply to give us 69. Similarly, 162 = 3^4 \times 2 and no other primes or prime powers would give us 162. This phenomenon is famously known as the Fundamental Theorem of Arithmetic and as this name suggests, prime numbers as well as this theorem are of tremendous importance, not only to the study of mathematics, but also to our lives today. What’s more, these numbers have fascinated seekers of knowledge for over three millennia, and in many different ways.

The study of prime numbers, as indeed the study of anything that interests us, is like undertaking a mountain expedition. Imagine a favourite mountain you would like to climb, say Mount Everest. To prepare for it, you immediately do a Google search and collect the necessary items for the same: Climbing apparatus, appropriate clothing, oxygen canisters, a satellite phone and a GPS (Global Positioning System) device. You then physically prepare and condition yourself for this climb. All this information is available to you at the click of a finger, but was this the case for the first person to attempt the climb? Behind the triumphs that we have today, lie the attempts of several people to scale the heights of Mount Everest. All the attempts before the 1953 expedition of Edmund Hillary and Tenzing Norgay failed (sadly, to the point of no return in some cases), but each quest brought new knowledge to the mountaineering community and contributed to the first successful attempt. Over the years, climbing expeditions have become more feasible not only by repeated experiences, but also by scientific tools like the GPS, which were originally developed for entirely different purposes.

Similarly, today, if an eager student like Tushit wishes to study prime numbers, he will have access to a vast literature of theorems, tools and “equipment” that were developed from scratch over a period of three millennia. He will also use tools and techniques from other areas that his earliest scholar-ancestors had not even envisioned. But, there is a crucial difference between the study of prime numbers, and an expedition to Mount Everest: we are still far from reaching the summit of “Mount Prime Numbers”. There is much that is yet to be discovered about prime numbers. There are many goal posts to reach before one can even contemplate what the summit looks like. At the same time, what we know about primes is also immense, and this knowledge has important applications that modern humanity cannot do without. We attempt to trace some developments that created a path between the earliest study of prime numbers and one such application, namely, safe digital transactions.

Capturing Primes

What did early attempts to understand prime numbers look like? Long ago, around 300 bce, a Greek scholar, Euclid of Alexandria, wrote a series of textbooks called The Elements in which he systematically put forth the conceptual foundations of mathematics through precise definitions, theorems and deductive proofs. Prime numbers find a place in his treatise and he mentions two important properties of primes: that any natural number n>1 can be uniquely factorized into a product of prime powers, and that there are infinitely many primes. In essence, this establishes the primes as building blocks of numbers and also tells us that there are infinitely many bricks available. This motivates the following question: How do we gather these building blocks? There are many aspects to this question:

- If an arbitrary number is placed before us (presumably very large), is there a way to determine the primality of this number?

- If a large collection of numbers is laid out in front of us, is there a way to arrange them and recognize all the primes among them “in one go”?

- Is there a method to generate prime numbers, as large as needed?

One of the earliest non-trivial ideas to answer the first two questions is attributed to the Greek polymath Eratosthenes around 240 bce. By non-trivial, we mean, in this context, an idea which does not require us to check the divisibility of n by every number smaller than it, a ‘brute force’ approach. He observed that any composite number n>1 is bound to have a prime factor less than or equal to \sqrt{n}. Indeed, if this were not true and all the prime factors of n were greater than \sqrt{n}, their product will be greater than n, a contradiction. In order to gather all prime numbers less than or equal to 100,

- We observe that the only primes less than or equal to \sqrt{100} = 10 are 2, 3, 5 and 7.

- We write down the numbers between 2 and 100 and cross out all multiples of 2, followed by all multiples of 3, then multiples of 5 and finally, multiples of 7. This is like putting the numbers through a sieve; all multiples of 2, 3, 5 or 7 make it through the holes.

- The numbers left behind in this “sieve” are all the primes between 2 and 100.

- Equivalently, a number n is prime if and only if it is not divisible by any prime number between 2 and \sqrt{n}.

This is definitely an improvement over the “trivial” method; for instance, it reduces the number of steps required to check the primality of a number from some number approximately equal to n, down to something smaller than \sqrt {n}. But, for large values of n, (large enough for real-world purposes, that is), this method too becomes infeasible. For example, even with our current computational machinery, if n has 200 digits, by the time our current computers determine primality using the sieve of Eratosthenes, this planet may not even be around!

However, Eratosthenes highlighted a new way to view primes: numbers which are “not fine enough” to escape through a sieve. It is, therefore, natural to think about nicer sieves which can capture primes more efficiently. This viewpoint has spanned a variety of new-generation sieves to address several questions. For example,

- Is there an efficient way to write down the factorization of large numbers into prime powers?

- Are there infinitely many primes p such that p+2 is prime?

- Can any (sufficiently large) integer n be written as a sum of two primes?

Each of the above questions has been investigated in detail using sieves and other methods, and has created a solid body of work which takes the study of primes in many different directions. But, let us for a moment, go back to early studies of primes.

Prime Tables: “Big data” Before the Advent of Computers

Early attempts (and by this, we mean the era before the mid-17th century) to record prime numbers were made for specific purposes. For instance, if a polynomial

x^n + a_1x^{n-1} + a_2x^{n-2} + \cdots + a_{n-1}x + a_nis equal to the product

(x - \gamma_1) (x - \gamma_2)\cdots (x - \gamma_n)of linear factors, then, we have,

a_n = \pm \gamma_1\gamma_2\cdots \gamma_n.Thus, it was considered useful to have readily available lists of factorizations of numbers whenever confronted with the problem of solving equations of higher degrees in integers (another important theme in number theory). Another purpose that such lists served was to tabulate perfect numbers, that is, numbers that are equal to the sum of their proper divisors. For example, the number 6 has proper divisors 1, 2 and 3; and 6 = 1 + 2 + 3. Some of the other perfect numbers are 28 and 496. Such lists of primes and factorizations of numbers had limited scope.

The English mathematician John Pell (1611–1685) is considered one of the earliest serious “curators” of primes [3]. Unfortunately, most of us hear his name in connection with the falsely titled “Pell’s equation” x^2 - Ny^2 = 1, where N represents a square-free number. Indeed, this equation was studied by the ancient Indian scholar Brahmagupta in the 7th century ce. His method to generate infinitely many solutions of this equation is named “bhāvanā”, and even inspires the name of this very magazine.

Nonetheless, John Pell has made important contributions to mathematics and one of them is a sincere attempt to create systematic records of primes. In his own words, the project to create prime tables and factor tables was part of a “mathematical-philosophical analogue of the book catalogue.” Under his instructions, a record of prime numbers up to 100,000 was created in 1668 by Thomas Brancker, a contemporary mathematician. Thus started the search for “big data” in primes. Pell’s table was to remain mostly inaccessible outside of England, for a hundred more years.

In the meanwhile, the Germans prepared their own prime and factor tables. Carl Friedrich Hindenburg, a German mathematician, independently discovered the sieve of Eratosthenes and aimed at creating prime tables up to 5 million in the 1760s. He also used physical objects like adjustable sliders and stencils to locate and eliminate multiples of primes. We refer the interested reader to a detailed article [7] by Martin Weissman, which among other things, describes how primes were tabulated before the advent of computers.

And so it was that in the year 1792, a young teenager by the name of Johann Carl Friedrich Gauss found himself in the possession of prime numbers up to 3 million. Gauss would go on to make many notable contributions to mathematics and physics that earned him the title “Princeps Mathematicorum” (Prince of Mathematics). By the time he turned 21, he had written the voluminous treatise Disquisitiones Arithmeticae, which did for number theory what Euclid’s Elements did for mathematics in 300 bce, in that it systematically arranged and presented the conceptual foundations of number theory as a discipline in itself.

Coming back to the table of primes, Gauss analysed this data in batches and made pertinent observations which proved to be a game-changer in the study of prime numbers. His fundamental conjecture (which was later known as the Prime Number Theorem and was proved in 1896) states that as x takes increasingly larger values, the prime counting function \pi(x), defined as the number of primes p less than or equal to x, comes closer and closer to the value of

\frac{x}{\log x}.In fact, as x grows to very large values, the relative difference between \pi(x) and x/\log x approaches 0. This conjecture of Gauss gave birth to the discipline of analytic number theory, a field that brings in sophisticated tools from real and complex analysis to interpret and solve elementary-looking problems in number theory.

Primes: Not Elementary, My Dear Watson

In mathematics, the word “elementary” does not necessarily refer to the level of difficulty of a problem. Instead, it often indicates that the problem can be easily explained to an interested primary school student. Sometimes, it takes centuries of serious thought to discover any new insight on the problem. This could include an interpretation of the problem in a new language which is not that elementary after all. Nonetheless, the new interpretation opens up the problem to strong techniques from other areas.

The conjecture of Gauss is one such problem. The latter half of the 19th century saw many simultaneous developments which fundamentally changed how this conjecture was addressed. These developments brought tools from calculus into the picture, tools that were sharpened and strengthened until the first complete proof of the prime number theorem was written.

A major step in this direction was taken by the Russian mathematician Pafnuty Chebyshev in 1848. Chebyshev viewed the prime-counting function as a summatory function. That is, let us define a function f(n), which picks up the value 1 if n is a prime and 0, otherwise. From this point of view, \pi(x) is seen as the sum

\sum_{n \leq x} f(n),that is,

f(1) + f(2) + f(3)+ \cdots + f(n) + \cdots,which terminates at the largest positive integer up to x.

Chebyshev focused on two functions which record logarithms of prime numbers. For a natural number n, his first function picks up \log \,n if n is a prime and 0, otherwise. The second function, often called the Lambda function and denoted as \Lambda(n) in the literature, is a little more subtle. This function recognizes not just primes, but all numbers which are powers of a single prime. That is, the function \Lambda(n) picks up the value \log p if n is of the form p^k for a prime number p and 0, otherwise. As such, the Lambda function does not discriminate between a prime number and its powers, say, 3, 9, 27, 81 and so on, uniformly assigning the value \log 3 to all of them.

Chebyshev replaced the “elementary” prime counting function by the more sophisticated function

Furthermore, using readily available calculus tools that were developed by Leonhard Euler and Colin Maclaurin more than a century ago, Chebyshev showed that the conjecture of Gauss is equivalent to proving that for increasingly large values of x, his sophisticated prime-counting function \psi(x) comes closer and closer to x.

This development gave a much needed fillip to the study of prime numbers. It changed prime counting from an elementary to an analytic problem, and henceforth, questions about prime numbers were studied with the help of Chebyshev’s function \psi(x). In fact, the formidable tools of calculus provide a concise dictionary between “elementary” questions about prime numbers and “not-so-elementary” questions about \psi(x).

Eleven years after Chebyshev’s inputs, another stimulus to the study of prime numbers was provided through the theory of complex functions. In 1859, Bernhard Riemann wrote a nine-page article, “On the number of primes less than a given magnitude” [5], in which he focused on a complex-valued function called the Riemann zeta function. At this point, it would be helpful to go back a little in time and recall an important observation made by Euler more than a century before Riemann.

Euler took a real number s > 1 and studied the infinite series

1 + \frac{1}{2^s} + \frac{1}{3^s} + \cdots + \frac{1}{n^s} +\cdots.Among the earliest theorems that we learn in college calculus is the fact that though this sum appears to go on and on, it is, in fact, a finite value (calculus assigns a precise meaning to the notion of a sum of infinitely many numbers). This value defines the zeta function and is denoted as

\zeta(s) = \sum_{n=1}^{\infty}\frac{1}{n^s}for each s > 1. Euler used the fundamental theorem of arithmetic (the assertion that each natural number n>1 can be written uniquely as a product of powers of primes) to show that \zeta(s) can be written as an “infinite” product of certain series that come from primes. Let us consider, for a prime p, the geometric series

E_p(s) = 1 + \frac{1}{p^s} + \frac{1}{p^{2s}} + \frac{1}{p^{3s}} + \cdots + \frac{1}{p^{ks}} + \cdots.This series also converges to a finite value. If we now take E_p(s) for each prime p and “multiply” them all together (the product of an infinite number of values has a precise meaning in calculus, just as the sum of an infinite number of values does), the fundamental theorem of arithmetic tells us that \zeta(s) is in fact the product of all the terms E_p(s). This is an important equality that links the zeta function to the study of prime numbers.

Riemann’s fundamental insight was to define the zeta function \zeta(s) for the larger domain of complex numbers s (as opposed to Euler’s zeta function for real numbers s >1). As it turns out, the zeta function can be defined for all complex numbers other than s=1.

Riemann carefully worked out some complex-analytic properties of the zeta function, which is now named after him. More importantly, he indicated how the prime number theorem can be viewed in terms of the roots of the zeta function on the complex plane. By roots, we refer to those complex numbers s where \zeta(s) is zero. Building up on Riemann’s ideas, about 40 years later, two French mathematicians, Charles de la Vallée Poussin and Jacques Hadamard independently provided complete proofs of the prime number theorem.

In his 1859 article, Riemann also makes a deep conjecture about the exact location of the zeroes of this function on the complex plane. The conjecture, called the Riemann hypothesis, remains unproven till date. In fact, it finds a place in the hallowed list of Millennium Prize Problems announced by the Clay Mathematics Institute that promises an award of 1 million US dollars for a correct resolution of each problem. In terms of the prime counting function, the Riemann hypothesis predicts a rather sharp rate of convergence between \pi(x) and x/\log x.

The work of Riemann has influenced much of number theory today, two predominant themes being, firstly, the search for a complete proof of the Riemann hypothesis and secondly, a template to encode several “elementary” arithmetic questions in the language of a wider class of zeta-like functions.

Roughly speaking, the period between 1792 and 1896 saw (what looked like) an elementary problem transition into a problem in real analysis and then, into a problem in complex analysis. These developments and early approaches to the Riemann hypothesis are carefully documented by H.M. Edwards in his well-known 1974 classic Riemann’s Zeta Function [4].

Let us, for a moment, get back to basics and watch the prime number theorem in action. What would you do if you needed a prime number, a really large one, say, a number with 200 digits? We will see in Section 5 what large primes can do for us. One would start by listing out all 200-digit numbers and start checking if each is prime. But, in this mechanical process, we do not have any insight about how long it would take us to hit the first prime. We consider the set of numbers

\{1,\,2,\,… ,\,N\},\, N = 10^{200}.The prime number theorem tells us that for a number n in this set, the probability that n is prime is close to

\frac{N/\log N}{N} = \frac{1}{\log N} = \frac{1}{200 \log 10} \approx \frac{1}{460}.By basic probability theory, this means that if we perform an experiment of choosing a random number from this set and testing whether it is a prime, on average, we should be able to find a prime number within 460 experiments. The number of experiments can be further reduced with some additional considerations, for example, by avoiding numbers with last digits 2, 4, 5, 6 or 8. This shifts our focus to a computational perspective: does it take too long to determine primality of a large number?

Primality Testing: A Little Theorem to Test Big Primes

In the mid-17th century, as the Germans were struggling to create prime tables (either by trial division, or via methods similar to the sieve of Eratosthenes), some parallel developments were taking place in France. In the year 1640, a young French lawyer, Pierre de Fermat, who spent his spare time pursuing mathematics, made some discoveries about prime numbers which have significant “real-world” consequences, especially with respect to primality testing.

Fermat’s observations can be best expressed with the help of a circular form of counting. Imagine walking on the hour points of a clock. You may walk millions of steps and yet, you will find yourself on one of the 12 points. In effect, therefore, the “net” result of your walk is between 0 and 11. This is an example of a circular form of counting called counting modulo 12. One can, of course, replace 12 with any number N and count modulo N. In this sense, each time we walk over N points on an N-hour clock, we find ourselves at the same point where we started. That is, any multiple of N steps amounts to a walk with zero change.

In October 1640, Fermat made an observation in a letter to a friend.

Let p be a prime number. Let us take a number A of our choice such that A is not divisible by p. If we walk A^{p-1} steps on a p-hour clock, we will find ourselves at the same point as if we had merely walked 1 step. For example, on a 13-hour clock, whether you walk 1 step or 11^{13-1} steps from the same starting point, you will find yourself at the same endpoint. In mathematical language, if the greatest common divisor of A and p is 1, then

\[\begin{equation*}

A^{p-1} \equiv 1 \, \pmod p.

\end{equation*}\]

Equivalently, p divides A^{p-1} - 1. Such equations are called congruence equations.

The theorem above is famously known as Fermat’s little theorem, even though it was proved almost a hundred years later by Leonhard Euler. It gives us an alternative method to check non-primality of a number.

Fermat’s “non-primality” test. Suppose, there is an A such that

- 1 \leq A \leq n-1,

- the greatest common divisor of A and n is 1, that is, A does not have any common prime factor with n,

and - A^{n-1} \not\equiv 1 \, \pmod n.

Then n is not prime. Thus, what Fermat’s method does is to produce “witness(es)” for the non-primality of n.

We note that the number of operations to be performed here could be as high as n-1, which is the number of steps in the trivial trial division method. So, at first glance, this method seems as inefficient as trial division. However, it encourages us to think about the primality of a number in new ways, that is, in terms of appropriate congruence equations. Just as the trial division method was refined by Eratosthenes and other sophisticated sieves grew out of his sieve, the method arising out of Fermat’s little theorem can be further modified. Indeed, variants of the method described above give us what are called “probabilistic” methods to check the primality of a number, as opposed to “deterministic” methods. Deterministic methods of primality testing return a definite (and correct) yes or no at the end of a finite number of steps. On the other hand, probabilistic methods tell us that n is probably prime, and this probability increases with each step that does not return a “no”. In the context of the Fermat test, with every A as above that returns A^{n-1} \equiv 1 \, \pmod n, it becomes more probable that n is prime.

Since the advent of computer technology, the requirement for large primes has increased (we will describe an application of large primes in the next section). Therefore, we are always in search of methods that test the primality of large numbers in an efficient manner. As we indicated in the previous section, a combination of the prime number theorem and an efficient primality test will help us find large primes in reasonable time.

Fermat’s primality test serves as a seed for several other probabilistic methods for testing primality. One such test, obtained by mathematician G.L. Miller in 1976 and modified by M.O. Rabin in 1980, has found feasible applications in cryptography and e-commerce. While details of this method will take us beyond the scope of the article, it would be opportune here to mention that the running time of the Miller–Rabin test reduces considerably if one assumes a “general” Riemann hypothesis. Primality testing methods create a beautiful amalgamation of the “theoretical” and the “practical”. We wonder if Fermat and Riemann had any inkling whatsoever of the important applications that their work would lead to!

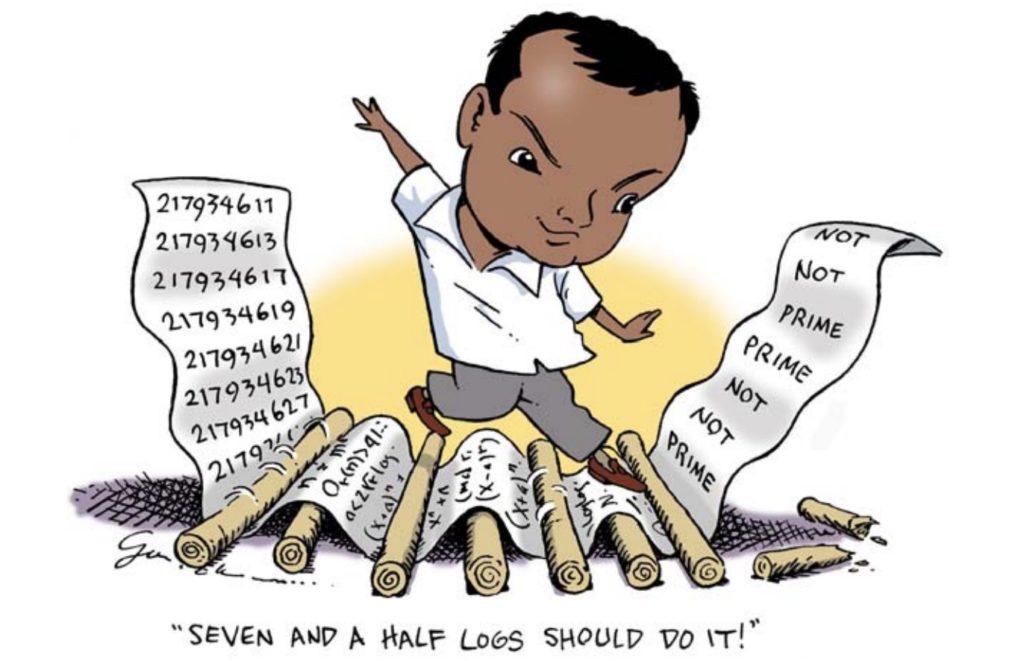

Prime Time News from IIT Kanpur

The beginning of the 21st century witnessed a major development from India in primality testing. Some time in August 2002, Manindra Agrawal, Neeraj Kayal and Nitin Saxena from the Department of Computer Science and Engineering at IIT Kanpur announced an article named “PRIMES is in P” [1]. This work came out of an undergraduate research project undertaken by Kayal and Saxena under the supervision of Agrawal. Within a matter of hours, their paper generated a lot of excitement and was widely circulated. They had obtained the very first deterministic and unconditional polynomial time algorithm for primality testing. That is, their primality testing algorithm

(a) certifies whether a number n is prime in “polynomial time”,

(b) returns a definite (and correct) yes or no after a finite number of steps,

(c) does not assume any as yet unproven conjectures (like the Riemann hypothesis).

Basically, it checks all the boxes for a good algorithm.

(Right) NeerajKayal NeerajKayal

Let us understand the word “polynomial time”. From the point of view of computer science, any method which requires the number of steps to be a positive power of n, however small that power is (even as small as 1/10000000, for example), will become inefficient as n grows. On the other hand, \log n grows very slowly in comparison with n^a for any a, however small. For example, let us take a large number

n = 10^{10^{14}}.We see that

n^{1/10000000} = 10^{10^{7}}.On the other hand,

\log n = 10^{14}\log 10,which is substantially smaller than n^{1/10000000}.

Thus, if the number of steps taken by a method is some power of \log n, the feasibility window of this method increases by a huge margin. That is, the method will work in reasonable time for much higher values of n than if the number of steps were close to a positive power of n. A polynomial-time algorithm, therefore, is an algorithm in which the number of steps is about (\log n)^c, where c is a positive, absolute constant. To say that “PRIMES is in P” is to say that the primality of n can be checked in approximately (\log n)^c steps.

The algorithm of Agrawal, Kayal and Saxena is built on simple principles; it uses a generalization of Fermat’s little theorem to polynomials over finite fields that had not been considered for algorithmic purposes before. A very deep and interesting problem was solved by them in an undergraduate project through a relatively elementary approach, and this work is now counted among the most important mathematical discoveries in recent times.

For further reading on the developments in primality testing leading to the work of Agrawal, Kayal and Saxena, we refer the reader to an exposition by F. Bornemann [2].

Cryptography: When Big Primes Keep Big Secrets

So far, the study of prime numbers tells us three important facts about prime numbers from a computational point of view. First, it is “easy” to generate large potential primes. Second, it is “easy” to decide whether a large number is indeed prime. Third, it is “difficult” for a computer to factor a large number. By “easy”, we mean that the work can be accomplished by a computer in an eminently reasonable time. By “difficult”, we mean the opposite: even with all the computational power we have now, accomplishing a “difficult” task would take the kind of time that we don’t have in our lifetimes, for example, a thousand years!

These three facts combine together to give us a safe and quick method to exchange sensitive information on online digital platforms between valid parties without being intercepted by an unauthorised third party. Fermat’s little theorem, again, plays a crucial role in this method. Let us describe a slightly more general variant of this theorem, which was proved by Euler in the 1730s.

Fermat-Euler theorem. Let N be a natural number and A be an integer such that A and N are coprime, that is, the greatest common divisor of A and N is 1. Let \phi(N) denote the number of integers lying between 1 and N which are coprime to N. Then,

A^{\phi(N)} \equiv 1\, \pmod N.Thus,

A^{\phi(N) + 1} \equiv A \, \pmod N.Around 240 years later, in 1978, Ron Rivest, Adi Shamir and Leonard Adleman, three computer scientists at the Massachusetts Institute of Technology in the USA, exploited the Fermat–Euler theorem and developed a method to share information between two parties safely. Typically, when a message is sent from a sender to a receiver, the sender has to disguise or “encrypt” the message and the receiver has to decipher or “decrypt” it. The method (or key) for the encryption and decryption is discussed privately between these parties or is shared between them through other safe sources. The RSA method (named after its creators) is set up in such a way that the key to encrypt a message is made public. While anyone can encrypt a message, sign it and send it across, only the intended receiver has the knowledge to decrypt a message and deduce whether it comes from a genuine sender. Such a system is called a public key cryptosystem.

The RSA method is easy to understand and we attempt to present it below in a simple form.

- Instead of a prime p, an online merchant takes a semi-prime number N, which is a product of two primes p and q, roughly of equal size. The reader can quickly check that for this chosen N, \phi(N) equals (p-1)(q-1). Thus, the Fermat–Euler theorem tells us that for any number A,

A^{(p-1)(q-1)+ 1} \equiv A \, \pmod{pq}.

The merchant then chooses two numbers E and D, so that (p-1)(q-1)+ 1 = ED. The merchant makes N and E public and keeps D private.

- You, the buyer, will typically take your credit card number A (which will be smaller than N) and encrypt it as the net value of A^E \, \pmod N. You don’t do it yourself, of course. You enter the number and the website does it for you since the encryption key E is public.

- The number D is not public. It is known privately to the merchant. On receiving the encrypted message A^E \, \pmod N, the merchant raises it again to the power D and calculates A^{ED} \,\pmod N. By basic congruence arithmetic and by the Fermat–Euler theorem,

\[\begin{equation*}

\begin{split}

(A^E)^D &\equiv A^{ED} \equiv A^{(p-1)(q-1)+1} \, \pmod N\\

&\equiv A \, \pmod N.

\end{split}

\end{equation*}\]This gives back the number A to the merchant. The credit card number (or intended message) therefore gets decrypted back to A.

But, you may ask: if N and E are public, can D really stay secret? The answer is an emphatic yes.

As mentioned above, the safety of the RSA algorithm lies in the fact that it is very, very difficult to break down or to factorise a large number N into its prime factors p and q. Thus, even if the “encryption” key E is public, to find out D, an unscrupulous third party will have to know the value of \phi(N) = (p-1)(q-1) and this is not possible unless one is able to factor N into its constituent primes p and q. This makes it practically infeasible to find out the decryption key D (which only the intended receiver knows) within a reasonable time.

In their 1978 paper [6] on the RSA algorithm, the authors estimated that the computing resources of those days could take, for example, 74 years to factor a number with 100 decimal digits and 3800000000 years to factor a number with 200 decimal digits! Today, we have more sophisticated computing resources and methods to factor numbers of this size. These methods originate from abstract ideas in mathematics like the number field sieve and elliptic curves. But, we now use even larger numbers for encryption that still have unfeasible factoring times with current technology.

A lot of research has gone into factoring large numbers. For example, between 1991 and 2007, the RSA Laboratories, a company formed by Rivest, Shamir and Adleman, ran the factoring challenge. They listed out semi-primes of various sizes with cash prizes for those who could factor them. One of the numbers in their list, RSA-768, a 232-digit number of bit-length 768 was factorised as late as December 2009 after two years of work on several computers. Numbers of higher sizes on this list still remain unfactored.

The fact that it takes much longer to factorise numbers than to generate primes and multiply them keeps our information safe.

In the show Kaun Banega Crorepati (KBC) that we mentioned at the beginning, a variant of the RSA method takes place in each episode when Mr. Bachchan logs into KBC’s bank account and transfers money into the account of the contestant. In fact, the interested reader can go to secure websites (for example the website of their bank or even the bank that Mr. Bachchan advertises in his programme!) and check their digital certificates. These clearly state the encryption algorithm (most likely, the RSA algorithm), the public key as well as the key sizes. The certificate also mentions its expiry dates: this is to ensure that the keys are updated before an unscrupulous party gets hold of an existing private key.

When Fermat sat down to write a letter mentioning the “little” theorem, he was purely driven by the joy behind his discovery. Perhaps, he hoped to trade insights with his correspondent and further consolidate his understanding. Over the course of the next four centuries, people continued to ponder over the “little” theorem and many such forays yielded a fresh perspective into prime number theory. Their motivation was just that; to gain a better understanding of the subject of study. The application to the RSA algorithm and to primality testing methods was a byproduct more than 350 years down the line. Fermat’s theorem, therefore, is a striking example of the bridge between a study of mathematics for its own sake and potential applications of paramount importance to the world. This example encourages us to pursue with honesty and perseverance whatever we find beautiful in mathematics. A lack of immediate “applicability” should not hold back our study; the pursuit of knowledge is full of pleasant surprises, and who knows what the insights obtained in the present could mean to those who come after us. In particular, we must continue, unhindered and unapologetically, our ascent to “Mount Prime Numbers”.\blacksquare

References

- [1] M. Agrawal, N. Kayal and N. Saxena. 2004. “PRIMES is in P”. Ann. Math. 160(2): 781–793

- [2] F. Bornemann. 2003. “PRIMES is in P: A Breakthrough for “Everyman””. Notices of the AMS. 50(5): 545–552

- [3] M. Bullynck. 2010. “Factor Tables 1657–1817, with Notes on the Birth of Number Theory”. Revue d’histoire des mathématiques. 16(2): 133–216

- [4] H.M. Edwards, Riemann’s Zeta Function. Reprint of the 1974 original [Academic Press, New York], Dover Publications, Inc., Mineola, NY, 2001

- [5] B. Riemann, Ueber die Anzahl der Primzahlen unter einer gegebenen Grösse, Bernard Riemann’s gesammelte mathematische Werke und wissenschaftliche Nachlass, zweite Auflage, pp. 145–155, Teubner, Leipzig, 1892; reprinted, Dover, New York, 1953

- [6] R.L. Rivest, A. Shamir and L. Adleman. 1978. “A Method for Obtaining Digital Signatures and Public-Key Cryptosystems”. Comm. ACM. 21(2): 120–126

- [7] M.H. Weissman. “Why Prime Numbers Still Fascinate Mathematicians, 2,300 Years Later”. The Conversation, April 2, 2018, https://theconversation.com/why-prime-numbers-still-fascinate-mathematicians-2-300-years-later-92484.