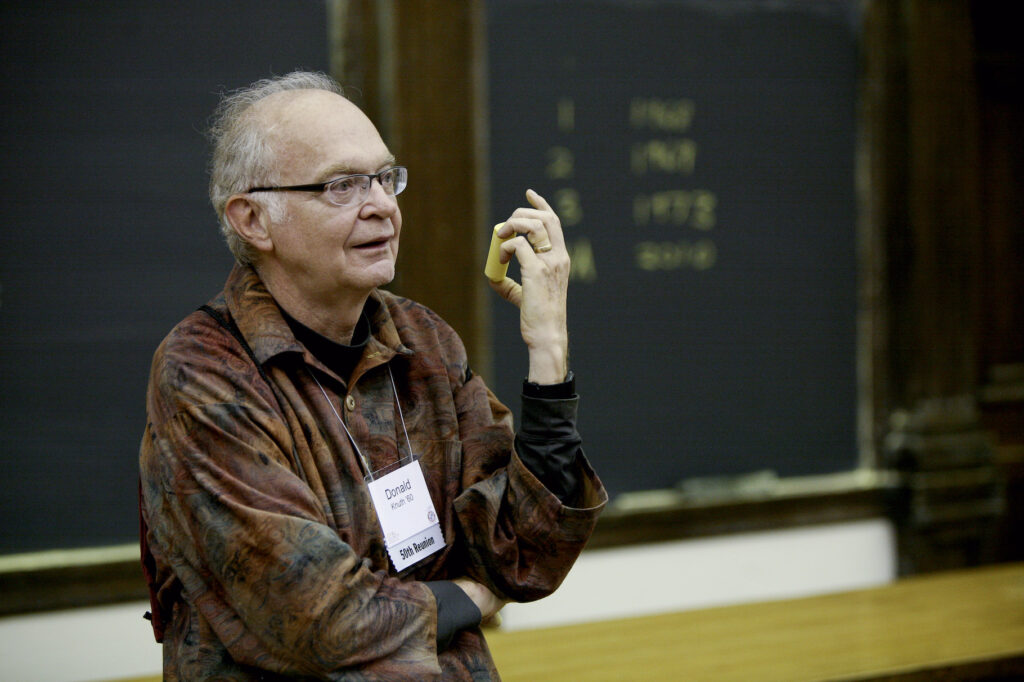

Donald Knuth is Professor Emeritus of The Art of Computer Programming at Stanford University. Hidden inside the very title is the key to the philosophy underlying Knuth’s approach to computing–that it takes a delicate blend of artistry and analytical reasoning to create works that stand the test of time. Be it his bestselling volumes of The Art of Computer Programming (TAOCP) or his founding contributions to “The Analysis of Algorithms”, he has frequently broken new ground; many such works are now considered the ideological bedrock upon which newer structures have been built. Indranath Sengupta and Sudhir Rao engage him in a wide-ranging conversation, starting from his earliest days to the present. The conversation is preceded by an introductory biographical sketch of our protagonist by Sudhir Rao.

Biographical Sketch

Admiring a vast collection of sculptures displayed in Frogner Park, Norway’s largest tourist attraction located in its capital Oslo, Donald Ervin Knuth finds himself in a deeply reflective mood. He is in a section of the park dedicated to celebrating the life and work of the artist Gustav Vigeland, all of it tastefully laid out over 80 sprawling acres, right in the park’s centre. Ruminating on his life and art, he instinctively knows how deeply satisfying it must have been for Vigeland to bring a life-long pursuit to a fulfilling closure. An artist could not possibly ask for more, he mutters to himself.

Knuth’s thoughts on Vigeland provide us with a peek into a creative mind, diligently understanding and appreciating the work of another. Knuth’s own contributions to the world of computing too, have similarly been hailed by experts as seminal and path-breaking, and have stood the stringent test of time. While most others would be happy to lay claim to just a fraction of the body of work that Knuth has produced over the past six decades, it must be stressed that Donald Knuth has always operated many levels above ordinariness.

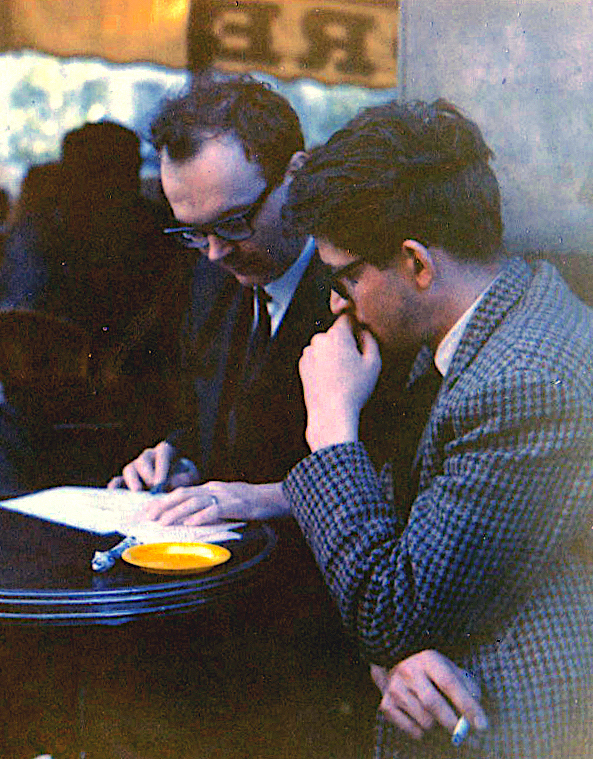

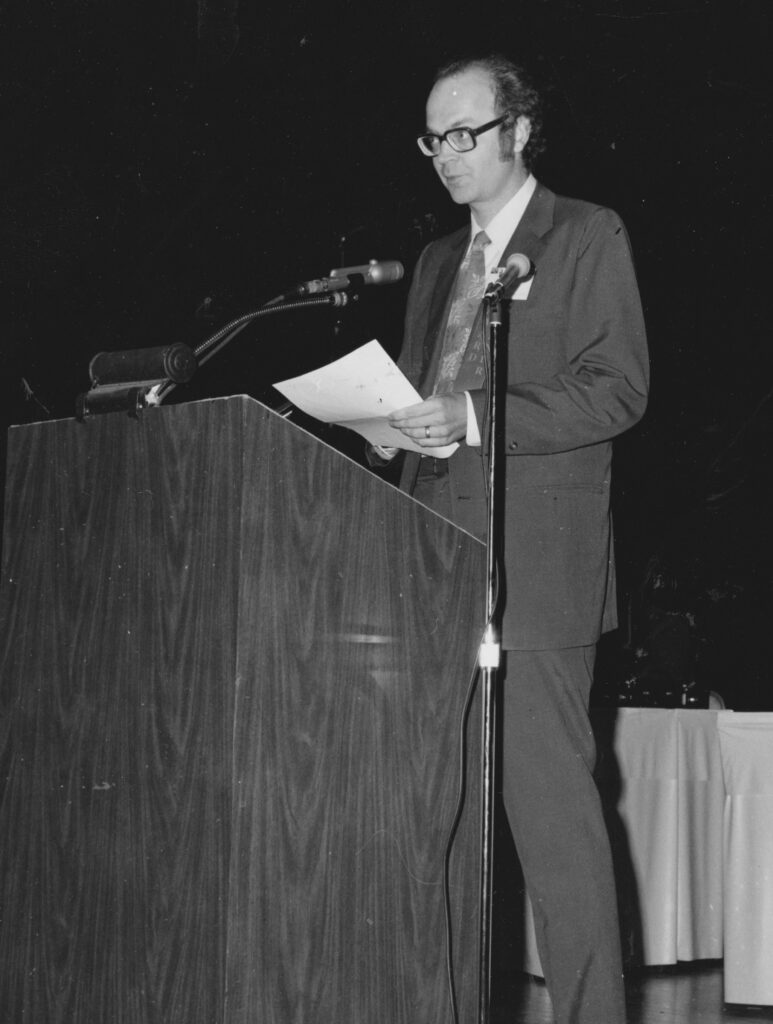

Credited with pioneering contributions such as how software must be conceptualized, written, compiled, and finally processed, his work has impacted the entire lifecycle of modern-day programming. The artist inside him has even ensured that the final output of such programs is pleasingly displayed via aesthetically designed typographic fonts, themselves omnipresent today. Further, by marrying what he affectionately calls the Art of Computer Programming with the rigour of mathematics, he has engendered a flourishing subject known as The Analysis of Algorithms, thereby impacting the theoretical core of computer science. This latter achievement is no less path-breaking for theoretical computer science than the invention of the microscope for life sciences, the telescope for astronomy, or the endoscope for medical sciences. Through rigorous analysis and penetrating insights, Knuth has peered deeply and incisively into what before him were mostly opaque interiors of a subject that had just then arrived; and in doing so, ended up profoundly impacting the twin landscapes of scientific computation, and digital technology. His invited address to the prestigious International Congress of Mathematicians (ICM) held in Nice, France, in 1970 was presciently titled The Analysis of Algorithms. Barely 32 then, Knuth’s work was already earning recognition in the highest academic forums, and the invitation to speak at Nice was confirmation that, while his chosen area of work was the still-nascent subject of Computer Science and its applications, his insights were already sufficiently rigorous and interesting for the exacting world of mathematics to sit up and take notice.

In the five decades since Knuth’s ICM 1970 address, the indispensability of rigour in computer science is now well established. Arieh Iserles at the Department of Applied Mathematics and Theoretical Physics, University of Cambridge, had this to say while reviewing Knuth’s The Art Of Computer Programming–Vol 4 in the June 2007 issue of SIAM Review:

We all take for granted this universal effectiveness of mathematics. Yet it is important to pause once in a while and ask ourselves what exactly renders mathematics into such a universal tool. The answer obviously is rigour. It is perhaps not surprising that computer scientists are the major beneficiaries of mathematical rigour: unlike protein-based life forms, computers will not settle for less.

Knuth, incidentally, had himself expressed similar sentiments a full three decades earlier, in December 1974, in an article published in The Communications of the ACM:

It is certainly desirable to make computer programming a science, and we have indeed come a long way in the last fifteen years. Fifteen years ago computer programming was so badly understood that hardly anyone even thought about proving programs correct; we just fiddled with a program until we `knew’ it worked. At that time we didn’t even know how to express the concept that a program was correct, in any rigorous way. It is only in recent years that we have been learning about the processes of abstraction by which programs are written and understood; and this new knowledge about programming is currently producing great payoffs in practice. The point is that when we write programs today, we know that we could in principle construct formal proofs of their correctness. This scientific basis is resulting in programs that are significantly more reliable than those we wrote in former days, when intuition was the only basis of correctness.

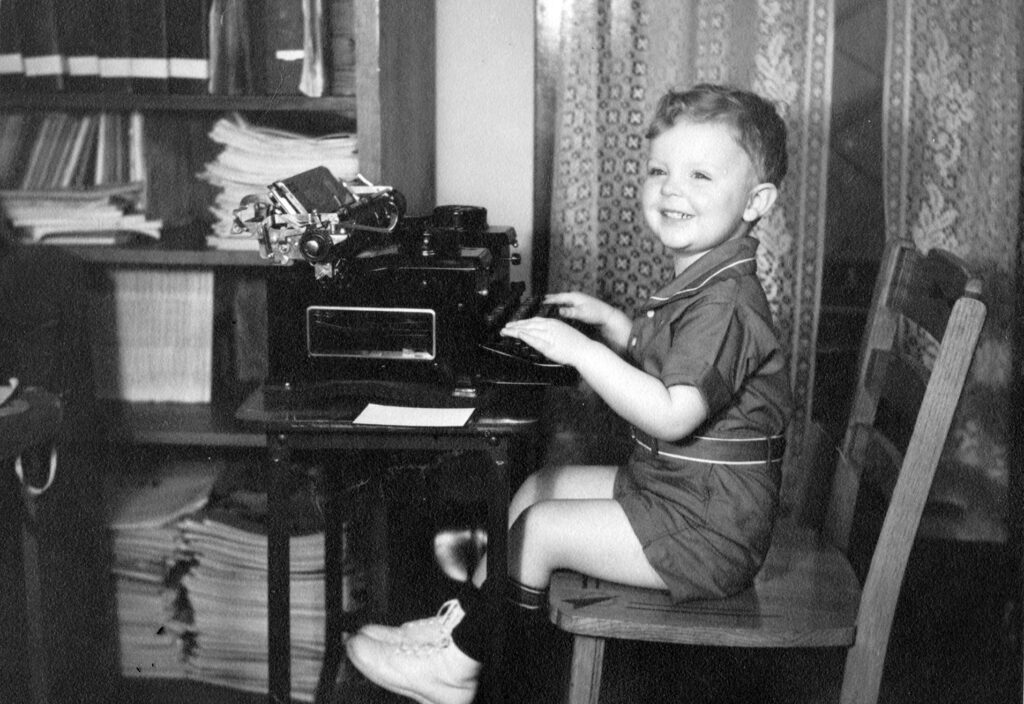

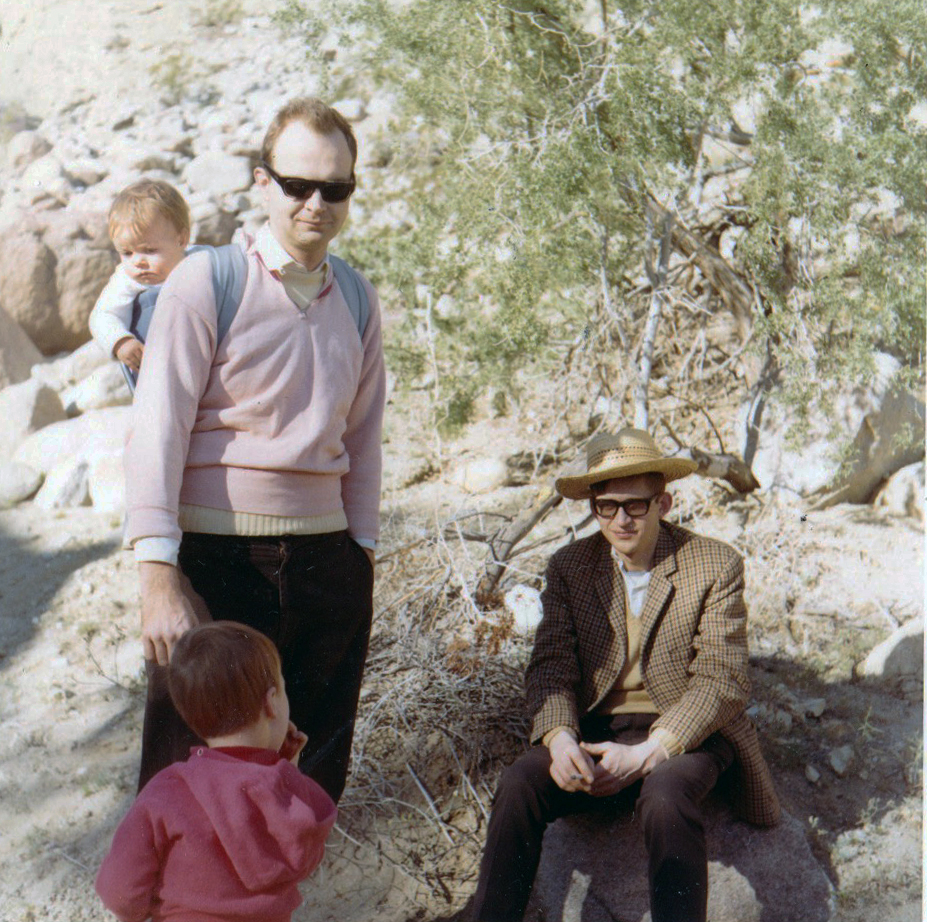

The circumstances that transported Knuth from cold, bucolic Wisconsin to the sunny and vibrant campuses of California where computing would take some of its greatest leaps, make for a fascinating story to both newcomers and cognoscenti alike. Donald Ervin Knuth was born on 10 January 1938 in Milwaukee, Wisconsin to a Lutheran couple, both deeply steeped in their neighbourhood community, faith, and music. The first of two children, Knuth gleefully inherited and soaked in all three parental traits—namely, strong fellowship and bonding with members of a chosen community, a lifelong kinship with the church, and an abiding love affair with classical instrumental music: the piano, and the organ, to be sure. A curious kid, Knuth took to reading quite early. Not greatly into sports, books offered him a grand distraction. He recalls an incident from 1943, that happened inside a large public library, some distance away from his Milwaukee home. Unescorted by his parents that particular day, and riding a streetcar alone, Knuth reached the library only to lose himself completely amidst books, all curled up with a book in a cosy corner. Inadvertently, the librarian promptly locked the library after working hours, with the five-year-old Knuth still holed up inside, both parties totally unaware of the other. A frantic search ended pleasantly though, with Knuth’s parents luckily managing to locate him, but not without a big fright!

Curious and studious, he participated in competitions aimed at middle and high school kids, and even won some of them. These victories, and the efforts put in to master a whole lot of the required extra-curricular material, prepared him mentally for the journey ahead. Case Institute of Technology, as it was called then,1 was highly recommended by his family members, and Knuth started his undergraduate education there. The years he spent on the Case campus turned out to be hugely beneficial, spurring him on to pursue mathematics and computer science, twin interests that were both vigorously vying for primacy in his mind. On the Case campus, serious sporting activity was anyway ruled out, owing to his general disinterest in sports. Yet, he was intrigued by sports in a purely academic way. Closely following Case’s basketball team’s progress in the inter-university league while working as its manager, Knuth got hooked to an interesting problem involving the performance prediction of the team, and its individual players. Knuth dived headlong into the nitty gritty, realizing that people up to that time had been considering only the points that players had scored, not the other things that basketball players do. He noticed that ball possession was an important element that never got into the statistics; neither did the number of fumbles, steals, rebounds, and missed shots. In those days, the scorekeeper noted how many fouls a player had made, but only because nobody could continue in the game after making five fouls. So Knuth decided to count many more things that had ever before been counted. After each game, he would then go over to the computing centre and punch cards, so that he could feed those newfangled statistics into the campus computer. The computer would then plug them into a formula he had devised to estimate each player’s real contribution to the game. Coach Phil Heim agreed that these new numbers were “spot on”, and he urged the players to improve their computed scores instead of simply getting the most balls in the basket. Nobody had previously pointed out that a player doesn’t really make two points when he scores, because he also loses possession of the ball. The result: Case ended up topping the league that year.2 This achievement created such a buzz that it was featured on national TV and in the well-known, internationally circulated magazine Newsweek.3

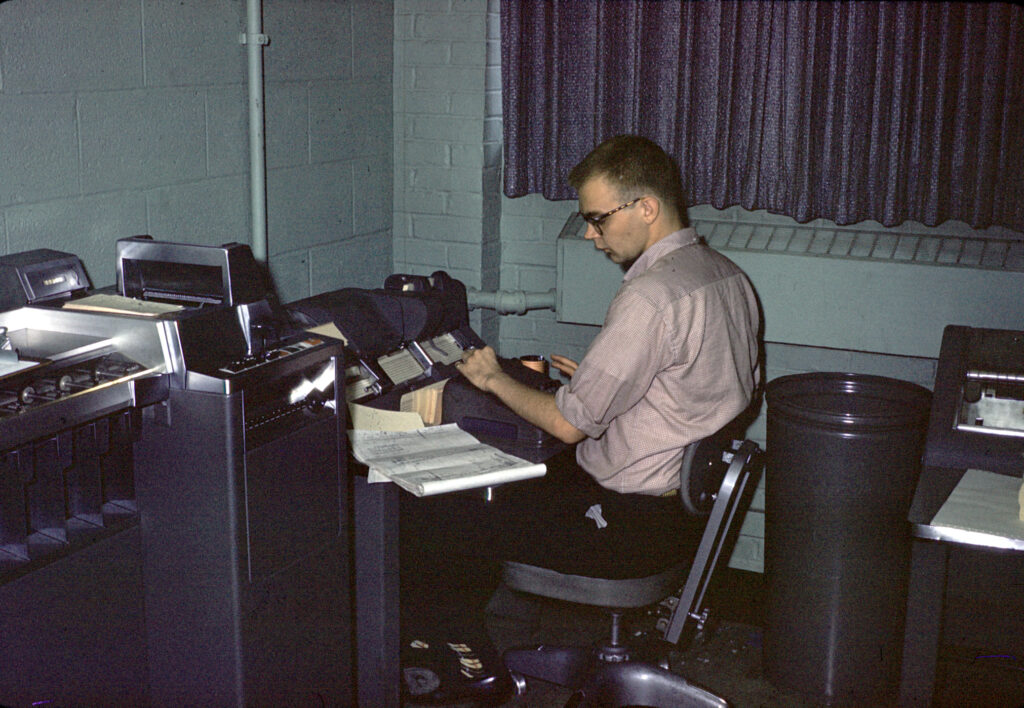

There was another incident from those days involving the solution of a rather hard problem, posed by a professor whose courses had a tough reputation. Louie Green, as was his wont, posed a characteristically hard problem to Knuth’s class and declared that anybody who solved it would not have to attend his classes anymore, for the rest of the semester. Further, Green declared that the correct solution would fetch the student a hugely coveted A+. To cut a long story short, Knuth solved it, earned an A+, and happily cut classes too. Incidentally, George Pólya himself had solved the same problem in a then-recent issue of the American Mathematical Monthly. As destiny would have it, much later, Knuth would even start off as Pólya’s junior colleague at Stanford, happily acknowledging Pólya’s influence on his own research and teaching. A lot of Knuth’s intellectual flowering on the Case campus was also made possible thanks to many long hours spent on a brand new IBM 650 that had just then been installed at Case. Knuth also undertook consultancy work for a firm by the name of TRW to write compiler software, even while enrolled as an undergraduate student.

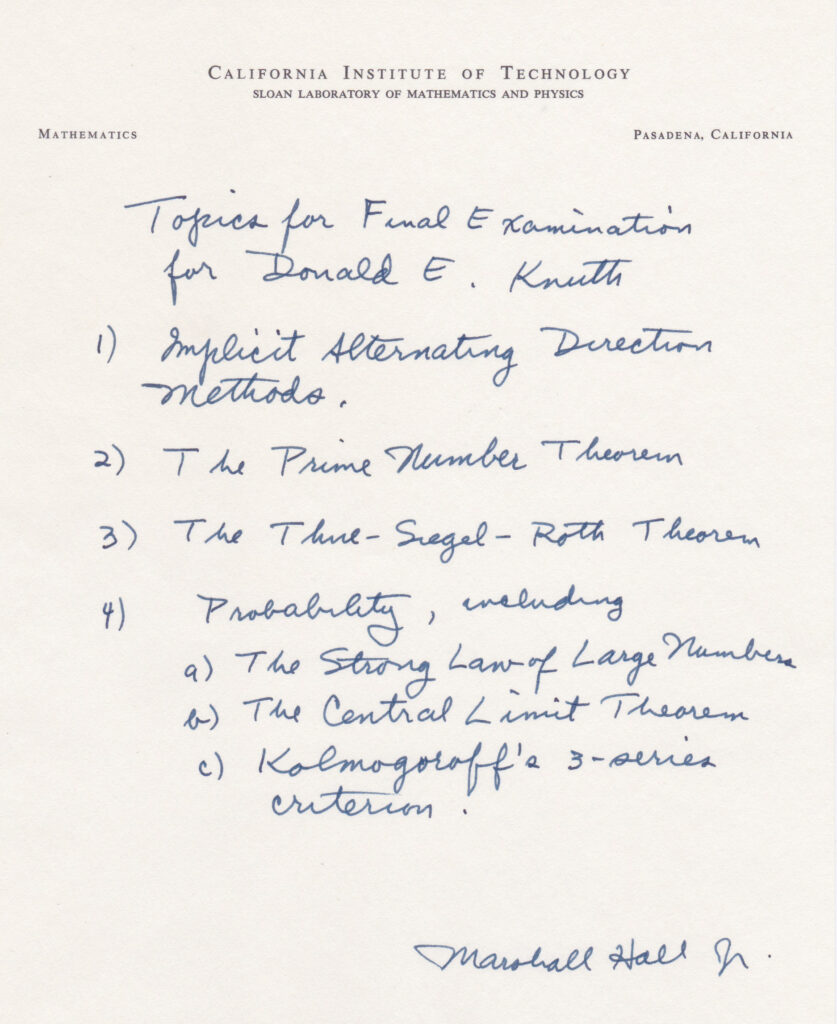

An inflection point in his student life at Case occurred when he sought the opinion of a famous Indian mathematician Raj Chandra Bose,4 who was visiting Case from North Carolina then. Bose too, had posed a tough problem to Knuth’s class in Case, and except for Knuth, the rest of the class had not made much progress. Knuth on the other hand, not only solved it but also wrote a computer program to check his solution, even running it on locally available computers. A hugely impressed Bose, with Knuth as a co-author, even got the solution published in a journal. The problem had to do with the idea of a so-called Latin Square of size 12 x 12. Bose, as the cognoscenti would doubtless recognize, was one among the three “Euler Spoilers”, all of whom had gone on to famously disprove a long-standing conjecture made by the legendary Leonhard Euler. The achievement had instantaneously thrust the trio of R.C. Bose, S.S. Shrikhande, and E.T. Parker from the quiet environs of academia to international spotlight, with the breakthrough even making it to the front page of the New York Times. Clearly, Bose had brilliance, authority, and global prestige. He also instinctively recognized that Knuth too would be a great addition to the global combinatorics community, and strongly urged him to go to Caltech for higher studies. Even more specifically, he advised Knuth to strongly consider working with Marshall Hall, who incidentally was also his collaborator E.T. Parker’s Ph.D. thesis advisor. Knuth took Bose’s advice, went to Caltech, and by the end of 1963 earned his Ph.D. under Hall’s guidance.

Reflecting on those crucial days in his life, and the factors that led him to eventually leave mathematics to pursue computer science, even though he had earned a Ph.D. in mathematics from Caltech, Knuth offers a well-thought-out reply. According to Knuth, people who are good in mathematics and pursue it, broadly fall into one of the four types of mathematical talent—(i) algebraic (ii) geometric (iii) logical-combinatorial, and (iv) continuous-big picture. Knuth always felt most comfortable pursuing its logical-combinatorial aspects. This, he says, ties in easily with the discrete problems usually seen in computing, and this inner calling eventually influenced his decision to study the mathematical aspects of computing. There were other instances too, he recalls. One such happened while on an ocean voyage, during his honeymoon. With his wife temporarily indisposed, Knuth found himself going through Noam Chomsky’s book Syntactic Structures. By the time he was done reading the book, he was completely convinced that mathematics and computing were deeply interlinked. On the other hand, the term Computer Science had not yet been invented, and research on such things was only a fringe topic, largely unexplored.

A quick survey of the still-fledging subject of Computer Science in the mid-to-late ’60s informs us that computer hardware was nowhere as ubiquitous as it is today, with only a handful of large proprietary machines available for commercial purchase. It was not just hardware that was in its infancy. Software was even less developed, and the corresponding situation in academia was not very heartening. Apart from a few forward-thinking campuses in the USA, most universities did not even have individual courses to offer, nor any trained faculty, let alone have fully dedicated departments. Only Stanford and MIT stood out like shiny beacons amidst the entire university landscape; in 1965, Stanford could boast of the very first department in the entire United States solely dedicated to pursuing all aspects of computing.

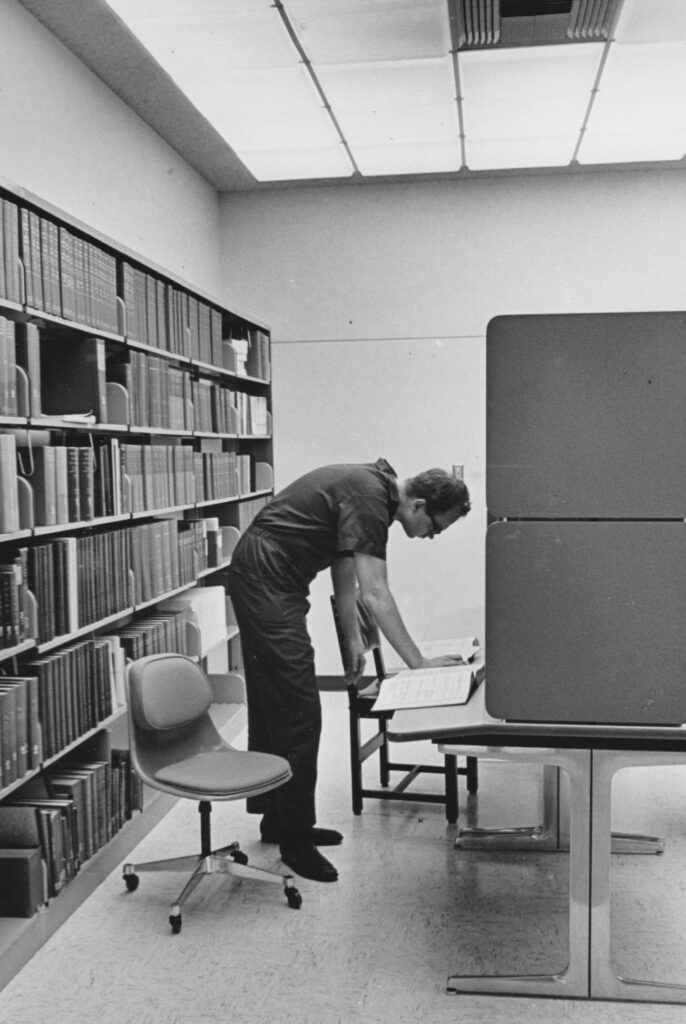

Knuth started his academic career as an Assistant Professor of Mathematics at Caltech, his PhD alma mater. Formally, he was a trained mathematician with a speciality in combinatorial analysis. He enjoyed teaching traditional mathematics courses, especially about discrete subjects; but his attention was progressively getting drawn towards matters that were clearly more computational, in both spirit and essence. He spent considerable time consulting with Burroughs Corporation about software and hardware design. He also became an associate editor of several computer journals. He introduced courses about computation into Caltech’s curriculum. He finally began to realize that his creative sweet spot was beckoning him to investigate the quantitative aspects of algorithm performance.

Elaborating on the reorientation of his overall philosophical outlook to research that preceded his eventual change of career, Knuth notes that there are practical benefits to approaching any scientific subject, by looking closely at its calculational and algorithmic aspects. This approach, he avers, has a deeper significance, because he believes that a person does not really understand something until he can teach it to a computer, essentially highlighting the importance of an algorithmic approach to the mastering of an unfamiliar topic/subject. An example that he is fond of citing comes from Linguistics, where he says

Linguists thought they understood languages, until they tried to explain languages to computers; they soon learned how much more remains to be learned.

Another compelling instance was from his days as a mathematician at Caltech:

For three years, I taught a sophomore course in abstract algebra, for mathematics majors in Caltech, and the most difficult topic was always the study of `Jordan Canonical Form’ for matrices. The third year, I tried a new approach, by looking at the subject algorithmically, and suddenly it became quite clear. The same thing happened with the discussion of finite groups defined by generators and relations; and in another course, with the reduction theory of binary quadratic forms. By presenting the subject in terms of algorithms, the purpose and meaning of the mathematical theorems became transparent.

Viewed from this perspective, the evolution of Knuth’s focus in the ’60s is typical of any pioneer, who while daring to explore a promising new terrain, is acutely aware of the challenges ahead too. Two events from this era turned out to be significant, in retrospect. One: In 1969, Knuth took a bold leap of faith and moved to the Department of Computer Science at Stanford, thereby announcing where his academic interests unmistakably lay. Two: In the ICM held in 1970 in Nice, France, he presented a paper titled “The Analysis of Algorithms”, firmly setting him on course to infuse mathematical rigour and precision into the formulation, design and analysis of algorithms. His colleague Robert W. Floyd had taught him early in the ’60s that computer science strongly needed a firm mathematical basis.

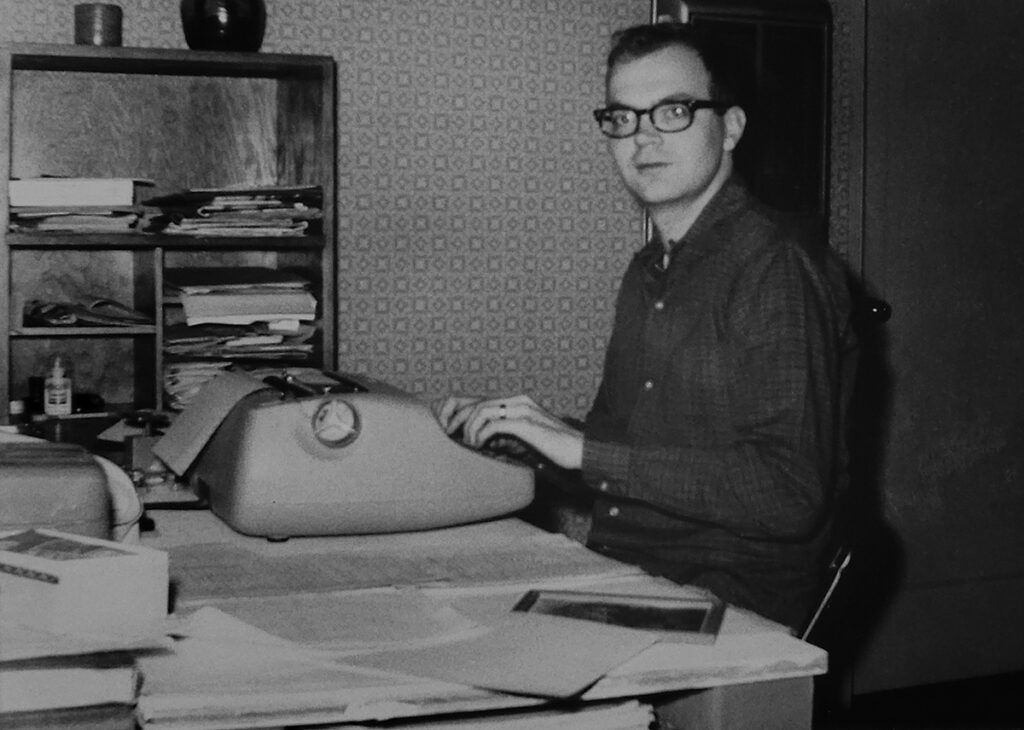

Today, Knuth’s name is synonymous with multiple volumes of his most famous work: The Art of Computer Programming (TAOCP). The events that led to the writing of the book offer a peek into the stellar reputation that Knuth has consistently enjoyed in academic and industry circles, burnished from when he was barely even a graduate student. An offer to write a book on Computer Programming came to Knuth, from the publishing house Addison-Wesley. Still 24 and enrolled as a graduate student in Caltech, this offer was initiated by Richard Varga, a mathematician at Case who was also on Addison-Wesley’s advisory board. Varga had a good impression of Knuth’s compiler software writing skills. Knuth was honoured and thrilled, because many of his favourite college textbooks were published by Addison-Wesley, and he had always loved to write. So in the early ’60s, he was a graduate student working towards a Ph.D. in mathematics at Caltech during the day, and an author working on a textbook on software during the evenings and nights. He was also a consultant to industry, whenever he had a moment to spare. This also meant that he had to refuse and forego two prestigious graduate fellowships, one from the National Science Foundation, and another from the Woodrow Wilson Foundation—because accepting either of them would have prevented him from working on anything besides his college studies.

In 1962, TAOCP was first conceived of as only a single book, with precisely twelve chapters in it. But once he started writing, he realized that the topics he wanted to treat demanded much more attention and detail. Today, TAOCP is not a single book but an elaborate and lavish bouquet of the choicest offerings in computer science, spread over five encyclopaedic volumes. The latest to be out, Volume 4B, was published as recently as October 2022. He hopes to have Volume 4C ready for publication in 2025, resolute and soldiering on tirelessly, when he will be a still sprightly 87!

It was while writing the very first volume of TAOCP that Knuth became familiar with the entire lifecycle of the production of a book. Knuth proudly admits to having “ink” in his blood, a reference to printer’s ink. His father, who worked multiple jobs to run the family back in Milwaukee, owned a mimeograph machine, and he surmises that this perhaps may have subconsciously influenced his later thoughts on digital typography, print aesthetics, and font design for use in computers. His own typographic journey itself started off totally unplanned, beginning with a strong sense of dissatisfaction on how digital characters were printed out onto the pages of a book. Pat Winston, an MIT Computer Scientist, had written a book on Artificial Intelligence, which was the first to be typeset with an experimental digital printing device, and Knuth happened to see the galley proofs. The type quality in Winston’s proofs, though produced with entirely digital methods, was every bit as good as Knuth had ever seen before using conventional metallurgical or optical technology. By comparison, the quality of phototypesetting in the current galley proofs of Knuth’s own TAOCP was miserable; he simply didn’t want to be the author of a book that looked so awful.

Today, the world of computing is deeply thankful for that inner angst. If modern-day computer users and industrial designers enjoy a surfeit of fonts, styles, and a host of other typographic offerings that have helped produce aesthetically printed digital material, much of it can be traced back to the long penance that Knuth immersed himself in, thereby impacting the very foundations of digital typography. An account of the beginning of the entire typography episode, in his own words, demonstrates the missionary zeal with which he has generally taken up momentous challenges, always armed with a quiet confidence that his contributions would eventually turn out to be significant. On leafing through the pages of Winston’s galleys, even while being filled with a uniquely Knuthian combination of disbelief and self-confidence, Knuth said:

I thought pixels just couldn’t cut it. But here, before my eyes, was an example of the highest quality, and I knew that everything that I saw on that page was created by 0s and 1s. It was not created by any mysterious metal process, any mysterious photographic process, or anything that was hidden from me, or scary. It was digital, yet beautiful. So the next morning, I woke up and I knew that my life was going to change. Whoa! I had been faced by this terrific problem about how to make my books look right, but now I was faced with tangible proof that a solution to that problem was all within my power. All I had to do was to find a way to put 0s and 1s on a grid, with 1s representing ink—and you know, I think, I am kind of a conceited guy, and I think I can do 0s and 1s as well as anybody in the world. Not only that. Because I was good at 0s and 1s, and because 0s and 1s were now destined to be the wave of the future for printing, it was my responsibility to think about how to get those 0s and 1s there. Other people needed to use digital typography too. As a computer scientist, I had to work on digital typography to solve this problem, because it wasn’t just holding me back—it was holding a lot of people back.

Knuth’s involvement with his work and books is a perfect example of an unforgiving master’s incessant obsession with his craft, and its own esoteric tools. As he writes new volumes for TAOCP, he publishes the material first in smaller paperback fascicles, dedicated to a specific advanced topic. And as he prepares those fascicles, he posts pre-fascicles online, so that experts in the subject can help him get the story right. An ever-growing legion of admirers and followers includes hundreds of volunteers who help correct errors before the real fascicles and volumes are published. In addition to the books he has authored, he has also created the widely used computer language TeX—the bread and butter for people in both academia, and industry. For a grateful global computing community, his works are akin to precious gems that have been carefully embellished in place by a rare artisan, who chisels away untiringly at his constructions, even as they evolve and take shape.

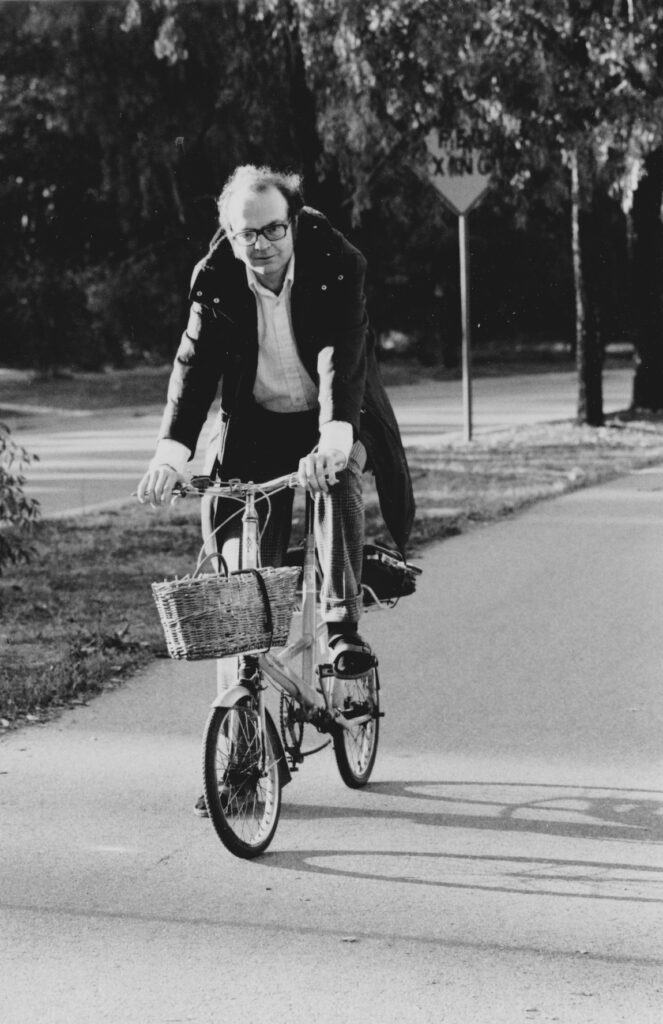

Needless to say, he has a punishing schedule, working on many projects at once. Immersed almost totally in work, music offers his main avocation. His home-converted-to-an-office houses a pipe organ and a large piano, offering him both rejuvenation and inspiration. Though officially retired from Stanford, and no longer obliged to take students, he still occasionally engages with the academic community via talks and lectures. Another uniquely Knuthian quirk that the world of computing has reluctantly gotten used to, is his complete self-imposed isolation from email. He cannot be directly reached by email, and has set up a rather elaborate protocol for people wishing to communicate with him. But that doesn’t stop the irrepressible Knuth from shooting off emails to others, with the recipients of such rare and unexpected communication fully made aware at the very outset, that the traffic is strictly one-way, and controlled solely by Knuth alone! All the rest of the correspondence that eventually reaches him is via the snail mail route, with his secretary at Stanford responsible for sifting through, and shortlisting, what he gets to read. This issue of Bhāvanā carries an accompanying interview of Knuth and is dedicated to him on his 85th birthday.

Originally planned for a late 2021 issue, we are glad that we are finally able to bring the accompanying interview to the light of the day. The ever gracious and supportive gentleman, Knuth read through our set of questions and dashed off a warm reply with characteristic panache. All of his replies to all the questions we posed to him are exactly 280 characters long, demonstrating yet again why the world celebrates his uniquely witty genius. The genial professor, via an appreciative note also apologized for the year long delay; and more than made it up for our readers with his intellect, and enthusiasm.

There is hardly a prize in Computer Science that Knuth has not won. He received the Grace Murray Hopper Award in 1971, Turing Award in 1974, National Medal of Science in 1979, John von Neumann Medal in 1995, Faraday Medal in 2011, in addition to memberships and fellowships of many learned societies of the world. He was awarded the Kyoto Prize in 1996 that came with a cash component of 450,000, and which adjusted to inflation would easily be close to 800,000 in 2023. All of it was given away to four charity organizations dear to the Knuth couple, highlighting how lofty his approach has always been.

To this day, Knuth remains deeply passionate about the historical study of all aspects of Computing. Data structures known as trees express relationships among cascading nodes, and effectively capture genealogies, influences, dependencies, as well as historical legacies. Incidentally, trees have been studied extensively by Knuth. Arieh Iserles again, in his SIAM Review article cited earlier, had this to say on Knuth’s treatment of some of the historical aspects of Computer Science:

Once we consider trees not just as abstract combinatorial objects, but as a means to express combinatorial relationships, they impinge on issues that concerned scholars for millennia: from Chinese tile arrangements to Indian and Greek poetic metres, medieval Kabbalah, medieval music and beyond. The quest for structure, pattern and symmetry underlies human culture and scholarship, even if sometimes it is expressed in the verses of the poets, rather than in mathematical formalism.

Interestingly, half a century ago in 1972, Knuth had studied Babylonian tablets, dating roughly from 1800 to 1600 BCE, to understand how some of their ancient content could be understood, when viewed through the lens of modern-day computing. In fact, by carefully examining some of his remarks there, it is clear that Knuth is in fact arguing for the converse of the above statement:

One of the ways to help make computer science respectable is to show that it is deeply rooted in history, not just a short-lived phenomenon. Therefore it is natural to turn to the earliest surviving documents which deal with computation, and to study how people approached the subject nearly 4000 years ago.

This remark from 1972 is historically invaluable, for it shows how pioneers such as Knuth strove relentlessly, even as recently as fifty years ago, to impart respectability and credibility to a then-fledgling discipline but which now is all-pervasive, having become an indispensable part of our social fabric.

To appreciate Knuth’s philosophy of creativity, a quote he admires from the book The Science of Art, authored by Robert E. Mueller, is particularly relevant:

“It was once thought that the imaginative outlook of the artist was death for the scientist. And the logic of science seemed to spell doom to all possible artistic flights of fantasy.”

Knuth goes on to add that while the scientific approach is generally characterized by the words logical, systematic, impersonal, calm, and rational, an artistic approach is characterized by the words aesthetic, creative, humanitarian, anxious, and irrational. To him, both these apparently contradictory approaches have great value with respect to computer programming. In Knuth’s computational universe, while computer programs created out of the synthesis of the two approaches can turn out to be elegant, exquisite, and perhaps sometimes even sparkling, one must constantly strive to write programs that are grand, noble, and truly magnificent!

Finally, a sign of an individual’s greatness, irrespective of the field of endeavour, is when allusions to a place in the annals of history are frequently invoked, even as the protagonist meditatively chips away at his workbench, unmindful and with nonchalance. A constantly striving spirit’s relentless quest to reach a higher plane, is our true collective inheritance from the Don of the Art of Computer Programming!

On behalf of Team Bhāvanā and the History of Mathematics in India (HoMI) project of IIT Gandhinagar, it is both our pleasure and privilege to invite you to share with us a personal account of your immensely productive life, and your pioneering works in the area of computer science. We wish to dedicate this article appearing in this issue as our humble tribute, and a gift on your 85th birthday.

DK: I’m deeply honoured that you wish to interview me, because I love the spirit of your magazine. How I wish there had been a similar magazine that had once conducted such interviews of (say) Euler, Gauss, and Ramanujan when they were still alive! I certainly don’t come close to filling the shoes of any of those giants; but I firmly believe that everybody can learn by studying the insights of creative people.

We learn that you have German ancestry and that you were quite a precocious child. Tell us about your childhood and your family, and also about how your name is pronounced.

DK: My dad Ervin Knuth (kaNOOTH) was a teacher in Lutheran schools. My mom Louise managed buildings in downtown Milwaukee. I enjoyed music and grammar, also wrote corny jokes. Was poor at sports and art. Spent many hours drawing graphs. Learned to identify many trees by their leaves.

Growing up in post-WWII America’s boom years, you had access to excellent public schools and community libraries. Did you find yourself lost in books then, and more so, were there any positive influences and incidents from those years, both at home and outside, that shaped your boyhood and young adulthood?

DK: At age 4 I was the youngest “bookworm” in Milwaukee’s library. Once was lost in that library, having stayed in the stacks past closing time. Went to parochial school where my teachers taught us to love others. Many chances to sing and to play music, to enjoy nature. Knew no math.

A television program aired while you were still in school provided a key bridge that connected language sentence structure to diagrams, and deeply impacted your lifelong appreciation for languages, and their internal structure. Please elaborate on this impactful event.

DK: My 7th-grade teacher introduced us to diagramming sentences. My friends and I tried to apply it to non-textbook examples, with limited success; but we learned a lot in the process. The TV program was different: I won a contest to find as many words as possible from given letters.

Post-WWII, America was not only making huge advances in science, but was also building computing machines that were available off the shelf. When did you first hear about, lay your hands on, and eventually go on to program a digital computer? Also, did you realize then that it was going to evolve to be such an intensely passionate journey?

DK: I probably first heard about UNIVAC on election night 1952, when I was 14 years old. I saw a real computer—the IBM 650—first in 1956, as a college freshman at Case. We were allowed to touch that machine, sitting at the console and feeding cards to it. I was hooked for life.

Let us dwell a bit more about your life at the erstwhile Case Institute of Technology, now Case Western Reserve University. You joined there when you were 17, and by 22 had earned a BS, and an MS too. What subjects did you study during those heady and busy days in Case?

DK: Freshman classes (physics, chem, calculus, civics, writing); sophomore (astronomy, basic math, diff geometry, physics2, history, speaking); junior (algebra, topology, electrical engineering, literature, numer analysis); senior (automata, combinatorics, logic, complex variables).

Apart from excelling in studies, you were editing a student campus magazine, creating probabilistically founded algorithms for rating Basketball players via win probabilities, and even programming an IBM machine on campus. Walk us through those four intense years at Case, please.

DK: Marched in the band, copy-edited the newspaper, was fraternity vice-president, fell in love, managed sports teams, wrote compilers and assemblers, entered math competitions, edited magazine and student handbook, watched plays and orchestra rehearsals, wrote a short musical comedy.

California Institute of Technology (Caltech) in the ’60s had the luminous Richard Feynman, and Murray Gell-Mann in Physics. In Math, it had the couple Jack Todd and Olga Taussky-Todd, both with strong interests in aspects of computing. Yet, when it was time to think of a PhD, you chose Mathematics in Caltech, and went with Marshall Hall Jr., a well known expert in Combinatorics. How did this choice of University and guide come about, and what was your PhD thesis about?

DK: R C Bose taught me combinatorics and inspired me to work with Hall. I planned at first to study designs with \lambda=2. But one day I happened to construct new kinds of non-Desarguesian projective planes, solving a conjecture, and Marshall told me that that should be my thesis.

Your PhD days were apparently already getting partitioned by your internally competing dual interests, Computing and Mathematics. We hear that you were also a consultant to Industry those days, even earning significantly more during the summer breaks via consulting, than the annual salaries of newly minted Assistant Professors! Was Hall sympathetic towards the dual hats you were donning then? Further, why did you later decide to completely give up consulting?

DK: Yes, Hall was an early believer in the power of computing to help develop combinatorial theory. Consulting was my connection to the newfangled field of Computer Science. I taught computing at Caltech, even as a math Prof. But I stopped consulting when becoming a Stanford CS Prof.

Around the same time, via a contract to author a book on Computer Programming, you had a realignment of academic interests that would eventually much later impart to you a sharper focus, along with a new identity in the world of academia. Please take us through this phase in your career, and the key personal milestones from this period.

DK: A representative of Addison-Wesley, the publisher of my favorite textbooks, met with me in January 1962 and invited me to write a new book about compiler-writing. That was exciting because existing publications were poor, one-sided, often contradictory. I’d always liked to write.

After your PhD, you joined the Department of Mathematics in Caltech as an Assistant Professor. But deep within, you were restless and constantly churning internally about being unable to pursue the various avenues that had opened up in your mind regarding Computing, and which by then had also grown to be an intellectual stirring, impossible to ignore. Why, how, and since when did Computing begin to occupy almost all of your serious academic interests?

DK: During the summer of 1962, my friend Bill Lynch and I wrote a FORTRAN compiler for UNIVAC SS80 computers. One day I decided to explore why its “hash algorithm” worked well; and got lucky: I saw how to solve that problem, and realized that many similar yet-unsolved problems exist.

Caltech’s own surprisingly primitive computing infrastructure in the ’60s was contrasted by excellent computing facilities available in the Jet Propulsion Laboratories (JPL) next door. Was this also broadly symptomatic of Caltech somewhat lagging then, in its overall focus on advances in Computing that were happening elsewhere? Was this one of the triggers for your eventual departure from there?

DK: As a consultant, I had easy access to excellent Burroughs machines near my home. Almost every university had primitive computing facilities at that time. Stanford was an exception: George Forsythe understood that computer science was a new field with deep intellectual challenges.

Volker Strassen, Stephen Cook, and you were three young mathematicians who, between the late ’60s and the mid-’70s, contributed significantly to our present understanding of the complexity and analysis of algorithms. While Strassen focused on the complexity of linear algebra and matrix operations, Cook’s work was founded more on the logical and theoretical aspects. How did you end up carving out your own path, so different from your contemporaries like Victor Pan, James Wilkinson, Shmuel Winograd, and others who were pursuing problems related to Numerical Analysis and Scientific Computing?

DK: My initial focus was on programming languages; I became editor of that section in CACM, then JACM. While writing my book I soon learned that compiler writers were also developing techniques of general interest, and that it was great fun to analyze those algorithms quantitatively.

At the ICM of 1970 (Nice, France), the very title of your paper “The Analysis of Algorithms” was a novelty. What crucial idea did you want to convey, even via your choice of this novel title? It is of historical context to appreciate that even as recently as in the early ’70s, computer science aroused no great interest except perhaps in a handful of campuses, and a few avant-garde companies.

DK: At the end of the 1960s, computer science was tripartite: Numerical analysis, programming languages, artificial intelligence. But none of those titles quite matched my interests. So I made up the name Analysis of Algorithms, whose first definition was: the part of CS I like best.

The late ’60s coincided with your departure from Caltech. Still barely 30, you joined Stanford as a full Professor of Computer Science. This move was made easier by George Forsythe, a benign and commanding presence, both at Stanford and also in the larger world of computing, and whom you have even likened to a “Martin Luther of the computer reformation”; also your thoughts on a deep friendship with another Stanford colleague, Robert W. Floyd.

DK: Floyd and I had been friends since we met in 1962. We had thrilling correspondence re Bose–Nelson sorting networks. We wanted to be eventually at the same university. Everywhere but Stanford we’d have to help build a leading department; Forsythe had already done that beautifully.

To pun, you happily checked into Stanford in 1969, and have never left since then! Computer Science at Stanford was spun out of its mathematics department, and the latter had some brilliant mathematicians on its roster. There was the majestic and statesmanly Kunihiko Kodaira, and his frequent collaborator Don Spencer. There was the Hungarian duo Gábor Szego̎ and George Pólya, both men exceptional in pedagogy as well as research. Charles Loewner, Paul Garabedian, Paul Cohen, and Solomon Feferman also belong to that era. Your thoughts on your illustrious colleagues.

DK: Stanford had great mathematicians, but almost all in analysis with a token algebraist. Cohen was brilliant, yet thought combinatorics trivial. Pólya was a wonderful exception; my other math colleagues were Dantzig in OR, plus people like Berlekamp, Gale, Karp, Lehmer at Berkeley.

Paul Cohen, a senior colleague at Stanford, started off with interests in analysis, before a pleasantly fateful post-dinner conversation with a logician colleague Sol Feferman in a joint Stanford-Berkeley Colloquium got him interested in Kurt Gödel’s ideas on the continuum hypothesis. This serendipitous event apparently spurred Cohen to make major advances in Logic, finally even winning him a Fields Medal, awarded in 1966. Did you too find the Berkeley–Stanford Colloquium of those days a stimulating experience?

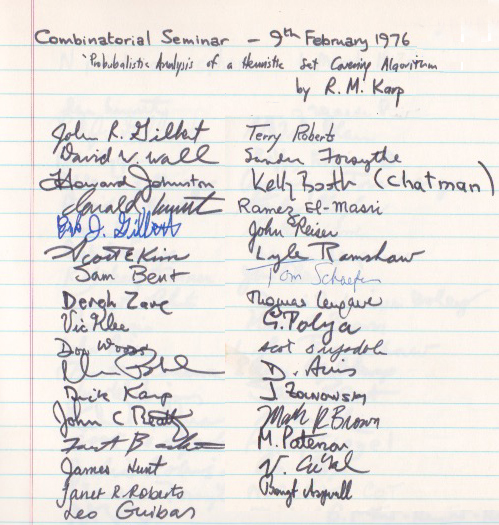

DK: Cohen was legendary also at Caltech. But I actually never heard about a Berkeley–Stanford math colloquium; at math talks I often asked myself “so what, so what?” I started a series of weekly combinatorial math seminars at my home, and people from Berkeley would often participate.

In a meeting held in Bucharest in 1971, you state: “The text of my sermon today is taken from Plato (The Republic, vii) who said, `I have hardly ever known a mathematician who was able to reason’. If we make an unbiased examination of the accomplishments made by mathematicians to the real world of computer programming, we are forced to conclude that, so far, the theory has actually done more harm than good. There are numerous instances in which theoretical `advances’ have actually stifled the development of computer programming, and in some cases they have even made it take several steps backward. I shall discuss a few such cases in this talk.” Your thoughts on theory and practice.

DK: A week before the Bucharest Congress I spoke at the IFIP Congress in Ljubljana about the beauties of theory. Thus I could straddle both sides of the fence. I’d seen both extremes when writing Volumes 1, 2, and 3 of The Art of Computer Programming. Everywhere we find yin and yang.

Your ten-year-long aesthetic pilgrimage in the ’70s, taken up to radically overhaul the very foundations of printing and publishing, heralded a brand new dawn for typesetting. In 1979, the Bulletin of the American Mathematical Society carried your article titled “Mathematical Typography”, which you incidentally dedicated to George Pólya on his 90th birthday; and where unsurprisingly, you cite bibliographical references dating back to Luca Pacioli of Venice from 1509 CE. How did the idea of a rigorous approach to the aesthetics of typesetting even begin?

DK: I loved books and alphabets since childhood. I loved the look of Addison-Wesley’s texts. I spent many years as copy editor, journal editor, and proofreader. Computer science needed new typographic tricks, which I helped to formulate. Many fine printing establishments were nearby.

“Every reasonable algorithm suggests interesting questions of a `pure mathematical’ nature; and the answers to these questions sometimes lead to useful applications, thereby adding a little vigour to the subject without spoiling its beauty”—are words drawn from your ICM 1970 address. Taking the letter “S” as a representative example for fonts, how did you geometrically formulate the problem of finding the “most pleasing curve” that would represent “S”, thus fusing mathematics with creative aesthetics, even while still operating in a purely algorithmic context?

DK: The letter S suggests a beautiful geometry problem of matching an ellipse to a tangent, solvable with classic primitives. Fitting curves to rasters, breaking paragraphs to lines, and many other appealing math challenges arise naturally, summarized in my 1985 lecture at Epidaurus.

Nobel Prize winning physicists, Paul Dirac and S. Chandrasekhar, have both eulogised beauty and its intimate kinship with truth, in their own characteristic ways. The inimitable computer scientist Edsger Dijkstra had a famous quote: “I find style important in Programming”. Set in the general spirit of these three titans, is the relentless and rigorous pursuit of truth, a time-tested approach to create works of lasting aesthetic beauty, across the spectrum of creative human endeavours?

DK: EWD3165 also said that everyone should “find their own style”. And I believe everyone should find their own beauty. But curiously, I don’t recommend finding one’s own truth! To me, truth is totally objective. And I’m glad there are mysteries, whose truth or falsity can’t be known.

Combinatorics and its various avatars have continuously held your attention for the past six decades now. If at all individual humans can be roughly partitioned as belonging to either those who naturally appreciate the universe of discrete objects (graphs, numbers, notes of music, finite fields), as against those for whom the universe of continuous objects seems naturally pleasing, where do you position yourself?

DK: I’m clearly at the discrete end of that continuum. (However, surreal numbers are much richer than the continuum itself.) Like Leibniz, I like to think of everything as made from 0s and 1s. We take limits when that’s a useful approximation, and when we understand what limits mean.

When we think of discrete objects and their study, especially in the 20th century, it is almost inevitable to think of Paul Erdõs. You and he shared many common interests, and were both also incidentally, invited speakers in ICM 1970, (Nice, France). Your Erdõs number though is 2, and not 1 as most people would expect, eyes closed. Tell us a good Erdõs story!

DK: When I met him in Nice, I proudly mentioned that I’d discovered the surprising formula l(382)=l(191) in the theory of addition chains. Without blinking he immediately asked if there were infinitely many cases with l(2n)=l(n). (That result was proved by Thurber three years later.)

There is a story of how Bill Gates wanted to make a big donation to Stanford’s Computer Science Department. This is the same Bill Gates, who Christos Papadimitriou recalls as being barely interested in research, even though their joint paper had been accepted for publication in a mathematical journal. Please fill us in on the story behind the donation.

DK: A few hours before Bill’s visit, I’d been thinking about the way random graphs evolve, at their big bang. From the transition probabilities, I realized that the denominator 17017 was the key to the whole pattern. I excitedly drew the picture on the blackboard; Bill was impressed.

In 2016, while delivering the Paris Kanellakis Memorial Lecture, you spoke about “Hamiltonian Paths in Antiquity”. In it, you mentioned the contributions of various Indian mathematicians to the world of Combinatorics. You also dwelt in detail on how the Sanskrit language was used to creatively convey combinatorial truths, usually set in a lyrical metre that lured people to dwell on the combined joys of reading, counting, and calculating. Does ancient India appeal to you as a civilizational setting where many of your own interests converged in potentially interesting, and insightful ways?

DK: Yes! The ninth century Kashmiri poets who created ingenious “citrakāvya” certainly rank among the pioneers of combinatorics. The study of Sanskrit prosody has also advanced other parts of mathematics. But only a few scholars remembered them, after other ideas became fashionable.

Personally, do you feel a closeness to the Indian approach to Mathematics, as espoused by early Indian pioneers? The Indian approach was pragmatic, and had focused on solving concrete problems, consciously eschewing an approach that sought to first theorise, or further, even axiomatise the subject. The manifestations of this Indian school of thought pervades the works of Āryabhaṭa, Bhāskara, Brahmagupta among others.

DK: I don’t agree that the Indian approach has been entirely pragmatic, instead of curiosity-driven. I immediately felt strong kinship with Nārāyaṇa when I learned of his Gaṇita Kaumudī (1356), since I’d played with exactly the same concepts in 1961! He must have felt lonely in 1356.

You have admitted a close kinship with languages, especially their inner structure. Right from your school days when you were introduced to diagramming sentences, to the time you were studying Noam Chomsky’s Syntactic Structures during your honeymoon, and even up to now, languages have fascinated you. Could you please throw light on the work of the Indian grammarian Pāṇini, and how his work done many millennia ago has relevance to the modern-day study and use of computer languages.

DK: Mathematics is the science of patterns. Languages are perhaps the most complex artifacts of civilization. Pāṇini had the profound insight that Sanskrit had patterns that could be formalized and put into a logical system. He gave his commentators millenia of rich food for thought.

From the viewpoint of Natural Language Processing (NLP) applied to Sanskrit, when one attempts to algorithmically unravel the intended semantic interpretation of a given sentence, an immediate challenge that is faced is the sheer variety of possible emergent meanings, each significantly different from the other, all thanks to the subtleties of word splittings in Sanskrit. Even a slightly differing splitting of a compound word can result in a vastly different semantic interpretation. How does one algorithmically tame, or rein in such undesirable situations?

DK: My knowledge of Sanskrit is entirely third-hand, but I’ve been told it’s this very ambiguity that has made things like citrakāvya possible and inspiring. On the other hand, modern NLP and ML methods seem to have made the complexity manageable, once enough data has been gathered.

The above example inspires us to invoke the domain of very large numbers. Your good friend, the late Ronald Graham is associated with an eponymous number that is also among the largest numbers ever known to be used in the published proof of a mathematical statement. Isn’t the idea of a “largest positive number” an oxymoron, because there is always a number just one greater than itself.

DK: No, the largest positive number used in a published proof isn’t an oxymoron (nor is it a constant)! My lecture on Coping With Finiteness cited Super-K = 10\uparrow\uparrow\uparrow\uparrow3, which is way bigger than Ron’s number; I think it’s too large to actually be comprehended.

Recently, as reported in the 4 February 2021 issue of Nature, a team of Israeli computer scientists used ideas from Gradient Descent Optimization to find mathematical expressions for fundamental constants seen in nature such as “e” and “\mathbf{\pi}”. Calling their algorithm the Ramanujan Machine, they even found novel continued fraction expressions for the Catalan constant, a ubiquitous object in combinatorial settings. The subject of combinatorial identities itself is an old and well-studied subject, and you have even written a preface for Marko Petkovsek, Herb Wilf and Doron Zeilberger’s classic, itself quirkily titled A = B. Mother Nature never ceases to amaze us, does she!

DK: Indeed, I keep running into apparently new patterns that involve only very elementary ideas. For example, I spoke to Zeilberger’s seminar in January about the fascinating Tchoukaillon array, which contains every positive integer exactly once; its properties are mostly unexplored.

In the 10 June 2021 issue of Nature, researchers from Google Brain working on a particularly thorny, decades-old problem in VLSI Design called Chip Floorplanning, made a breakthrough that reduces otherwise painstaking and expensive effort expended by a team of human experts working for many months, to a mere 6 hours. Hailed as a breakthrough by both industry and academia, Deep Reinforcement Learning, the learning framework behind the advance, is ideally suited for problems involving combinatorial data. Considering the centrality of combinatorial ideas in your approach, can readers expect relevant material on AI and ML in coming issues of TAOCP?

DK: No; AI and ML are topics that others can write about far better than I. Of course there are many flavors of learning and many flavors of combinatorics. For example, TAOCP does discuss reinforcement in message-passing models of random satisfiability problems. But that’s different.

In the January 2021 issue of Bhāvanā, when David Mumford was asked what he thought of Deep Learning, he said that he was initially sceptical until he came across related work by Chris Manning. We quote Mumford verbatim: “Manning asked these two questions: `How do these deep learning algorithms which analyse sentences work? Are they `discovering’ grammar?”. Mumford goes on to say that he was eventually convinced that Manning’s creation of a suitable high-dimensional representation of words already implicitly carried inside them, the till then elusive grammar of the sentence. Will we see machines leading humans not just in mechanical tasks, but in intellectual tasks as well?

DK: I’m still with Mumford’s former self with respect to not believing that ML can find discrete things like orthogonal Latin squares (loved by me and my mentor R C Bose). The big potential problem is that nobody is able to understand how deep neural networks reach their conclusions.

On the P = NP question, you earlier held the position that P is not equal to NP. But, we hear that you now hold a different viewpoint. Was this change triggered by Laszlo Babai’s treatment of the Graph Isomorphism Problem? Or did it have to do with Peter Shor’s Quantum Algorithm that successfully took on the classical RSA algorithm?

DK: No. I suspect that P=NP because a polytime algorithm might exist without being comprehensible (even more so than Super-K). Existence is far different from embodiment. Robertson and Seymour showed that polytime algorithms for some graph problems exist, yet are probably unknowable.

The great Israel M. Gelfand listed Beauty, Exactness, Simplicity, and the Craziness of ideas, as what mathematics essentially shares with both music and poetry. Since you are in equal parts both scientist and artist, as your uniquely crafted designation at Stanford stands testimony to, do you believe that in order to create ideas of lasting aesthetic value, the four ideas listed here are necessary and sufficient? What about passion?

DK: Hmm. Why did Gelfand leave out “fun”? Also, the Japanese philosophy of Wabi-Sabi explicitly denies that Exactness is necessary, or sufficient. My musical and poetic friends aren’t fans of exactness either; perfect rhythm is too dull. Passion and je-ne-sais-quoi6 are indispensable.

Active participation in the Lutheran Church, alongside a passionate pursuit of music, have both been a big part of your entire life outside of academics. How has music itself influenced your appreciation of combinatorics, or is it the other way around? In a related context, do you see parallels between music and combinatorics, especially in the works of the masters?

DK: Anybody who looks at the scores of Tchaikovsky, say, realizes that he was a great combinatorialist. There are strong historical ties (works of Sār\dot{\rm n}gadeva, Mersenne, and Schillinger explicitly, Bach implicitly). This week I’m enjoying the fantastic combinatorics of Burt Bacharach.

From Fairchild Semiconductors to SUN Microsystems to Google, a host of hugely successful and profitable technology companies have all been Stanford babies at some point. Did you ever personally harbour entrepreneurship dreams, considering that you are the world’s foremost guru of Programming, and have overseen and presided over many advances that have provided the ideological fuel to the revolution happening literally outside your window, in Silicon Valley?

DK: I understand why my friends find fulfilment when they supervise others or affect the marketplace. But the profit motive has always been furthest from my thoughts, after I had a stable job. I envy astronomers, because people understand that astronomers are motivated by curiosity.

The history of Combinatorics is rich and filled with amazing personalities and achievements. Since you love setting ideas in their proper historical setting, as evident in the allusion to a manuscript from 1509 CE in the bibliography of your Bulletin of the AMS article on Typography, we would like to know who your own heroes of combinatorial thought are. Since this may be a potentially ill-posed question owing to the sheer antiquity of the subject, let us focus on the last six centuries, in linear order starting from the 16th century onwards till date, and one for each century.

DK: Great question! Girolamo Cardano (1501–1576); John Wallis (1616–1703); Leonhard Euler (1707–1783); James Joseph Sylvester (1814–1897); Jack Edmonds (1934–); László Lovász (1948–). And let me add Richard Stanley (1944–), a student in the first calculus class I taught (1963).

|

|

You have been widely feted, and have won the Turing Award, the Kyoto Prize, the National Medal of Science, the John von Neumann Medal, the very first ACM Grace Murray Hopper Award. There is even an asteroid in deep space by the name 21656 Knuth. But surprisingly, you now choose to stay away from email and electronic communication, preferring to solely use secretarial help to sift through sack loads of snail mail. How do you manage your grinding daily schedule between keeping up with future issues of TAOCP, and other academic responsibilities such as being the Oracle of a grateful, and appreciative community of Computer Scientists and Programmers?

DK: I have no TV. I’ve been retired since 1993. I work in “batch mode”, not “swap-in-swap-out”. I write and rewrite TAOCP one word at a time. I swim almost every day. Take frequent naps. Play piano and organ. Go to Stanford Theatre. Have loving family. Have myriad helpers. Chocolate.

Richard Hamming said, “The purpose of computing is insight, not numbers”. To set the context here, here is a quote by the late Vladimir Voevodsky (Fields Medal, 2002) who envisaged computers working as assistants to professional mathematicians, helping them automatically verify the correctness of proofs that they come up with. “The world of mathematics is becoming very large, the complexity of mathematics is becoming very high, and there is a danger of an accumulation of mistakes. Proofs rely on other proofs; if one contains a flaw, all others that rely on it will share the error.” Is this the harbinger of a more synergetic intellectual relationship between man and machine?

DK: (Also, the purpose of machine learning should be insight, not results!) It is wonderful that computers complement human capabilities, not only for verifying proofs but also symbolic calculations, etc. Of course computer programs can have mistakes; so can their proofs of validity.

Humour in uniform has evidently kept you in good humour all these years! In a course titled “Concrete Mathematics” that you offered across departments at Stanford, and for which you were teaching from your eponymous book, you had students hoping that they were going to learn some “hard”, and not “soft” mathematics. When they heard you say in class that there would be no “Theory of Aggregates”, nor “Stone’s Embedding Theorem”, and not even the “Stone-C̆ech Compactification”, a disappointed bunch of civil engineers quietly left the room. Your uninhibited comments!

|

|

DK: The “graffiti” in the margins of Concrete Mathematics, mostly contributed by Princeton and Stanford students when Ron and I first taught from preprints of the book, have clearly been successful: Any errors they contained were discovered by readers before all mistakes in the text.

We understand that “Prediction is difficult, especially of the future”. We also learn that you are not too fond of making prophecies. But still, we want to hear your views on developments that are welding the core of computing, with mathematics and physics like never before. What should the Don Knuth of the Quantum Machine Learning Era of the 21st Century, say a teenager running a program on a Public Quantum Cloud from somewhere in interior India be aware of, even while he/she prepares for the challenges and opportunities ahead?

DK: I’ve tried unsuccessfully to understand quantum computing. Maybe there are people who understand it but can’t fathom the kind of computing that I do. All I know is that there seem to be two completely different things, both called “computing”. Everybody should follow their own star.

\mathbf{\infty} Thank you for the exhilarating questions. Please continue to interview others.

Dear Professor Donald Knuth, this interaction has been an inspiring experience for us, even as we walked down memory lane with an accomplished pioneer. We wish you many more years of robust health, and continued success in research, and other pursuits of life. Thank you! \blacksquare

Footnotes

- today’s Case Western Reserve University. ↩

- The ensuing URL has a video link featuring Don Knuth, Phil Heim and their basketball team: https://www.youtube.com/watch?v=dhh8Ao4yweQ\&t=4s.↩

- Newsweek: 53(1), (5 January 1959), page 63: “What’s That About a Score Card? A Computer’s the Thing”. ↩

- “Raj Chandra Bose: Universal Mathematician”, by H. Howard Frisinger and Indranath Sengupta. Bhāvanā, Vol 6(2) April 2022. https://bhavana.org.in/raj-chandra-bose-universal-mathematician/↩

- “A Short Introduction to the Art of Programming” by Edsger W. Dijkstra; Paperback, 97 pages, published in August 1971 by Technische Hogeschool Eindhoven. ↩

- The meaning of the French expression `je-ne-sais-quoi’ is with reference to something (such as an appealing quality), that cannot be adequately described or expressed. ↩